7 Analysis of variance

This chapter is primarily based on Field, A., Miles J., & Field, Z. (2012): Discovering Statistics Using R. Sage Publications, chapters 10 & 12.

7.1 Introduction

In the previous section we learned how to compare means using a t-test. The t-test has some limitations since it only lets you compare 2 means and you can only use it with one independent variable. However, often we would like to compare means from 3 or more groups. In addition, there may be instances in which you manipulate more than one independent variable. For these applications, ANOVA (ANalysis Of VAriance) can be used. Hence, to conduct ANOVA you need:

- A metric dependent variable (i.e., measured using an interval or ratio scale)

- One or more non-metric (categorical) independent variables (also called factors)

A treatment is a particular combination of factor levels, or categories. One-way ANOVA is used when there is only one categorical variable (factor). In this case, a treatment is the same as a factor level. N-way ANOVA is used with two or more factors.

Let’s use an example to see how ANOVA works. Assume that you are a marketing manager at an online fashion store, and you wish to analyze the effect of online promotion on sales. You conduct an experiment and select a sample of 30 comparable products to be included in the experiment. Then you randomly assign the products to one of three groups: (1) = high promotion level, (2) = medium promotion level, (3) = low promotion level, and record the sales over one day. This means that you have 10 products assigned to each treatment.

As always, we load and inspect the data first:

online_store_promo <- read.table("https://raw.githubusercontent.com/IMSMWU/Teaching/master/MRDA2017/online_store_promo.dat",

sep = "\t", header = TRUE) #read in data

online_store_promo$Promotion <- factor(online_store_promo$Promotion,

levels = c(1:3), labels = c("high", "medium", "low")) #convert grouping variable to factor

str(online_store_promo) #inspect data## 'data.frame': 30 obs. of 4 variables:

## $ Obs : int 1 2 3 4 5 6 7 8 9 10 ...

## $ Promotion : Factor w/ 3 levels "high","medium",..: 1 1 1 1 1 1 1 1 1 1 ...

## $ Newsletter: int 1 1 1 1 1 0 0 0 0 0 ...

## $ Sales : int 10 9 10 8 9 8 9 7 7 6 ...print(online_store_promo) #inspect data## Obs Promotion Newsletter Sales

## 1 1 high 1 10

## 2 2 high 1 9

## 3 3 high 1 10

## 4 4 high 1 8

## 5 5 high 1 9

## 6 6 high 0 8

## 7 7 high 0 9

## 8 8 high 0 7

## 9 9 high 0 7

## 10 10 high 0 6

## 11 1 medium 1 8

## 12 2 medium 1 8

## 13 3 medium 1 7

## 14 4 medium 1 9

## 15 5 medium 1 6

## 16 6 medium 0 4

## 17 7 medium 0 5

## 18 8 medium 0 5

## 19 9 medium 0 6

## 20 10 medium 0 4

## 21 1 low 1 5

## 22 2 low 1 7

## 23 3 low 1 6

## 24 4 low 1 4

## 25 5 low 1 5

## 26 6 low 0 2

## 27 7 low 0 3

## 28 8 low 0 2

## 29 9 low 0 1

## 30 10 low 0 2The null hypothesis, typically, is that all means are equal (non-directional hypothesis). Hence, in our case:

\(H_0: \mu_1 = \mu_2 = \mu_3\)

To get a first impression if there are any differences in sales across the experimental groups, we use the describeBy(...) function from the psych package:

library(psych)

describeBy(online_store_promo$Sales, online_store_promo$Promotion) #inspect data##

## Descriptive statistics by group

## group: high

## vars n mean sd median trimmed mad min max range skew kurtosis se

## X1 1 10 8.3 1.34 8.5 8.38 1.48 6 10 4 -0.24 -1.4 0.42

## --------------------------------------------------------

## group: medium

## vars n mean sd median trimmed mad min max range skew kurtosis se

## X1 1 10 6.2 1.75 6 6.12 2.22 4 9 5 0.17 -1.58 0.55

## --------------------------------------------------------

## group: low

## vars n mean sd median trimmed mad min max range skew kurtosis se

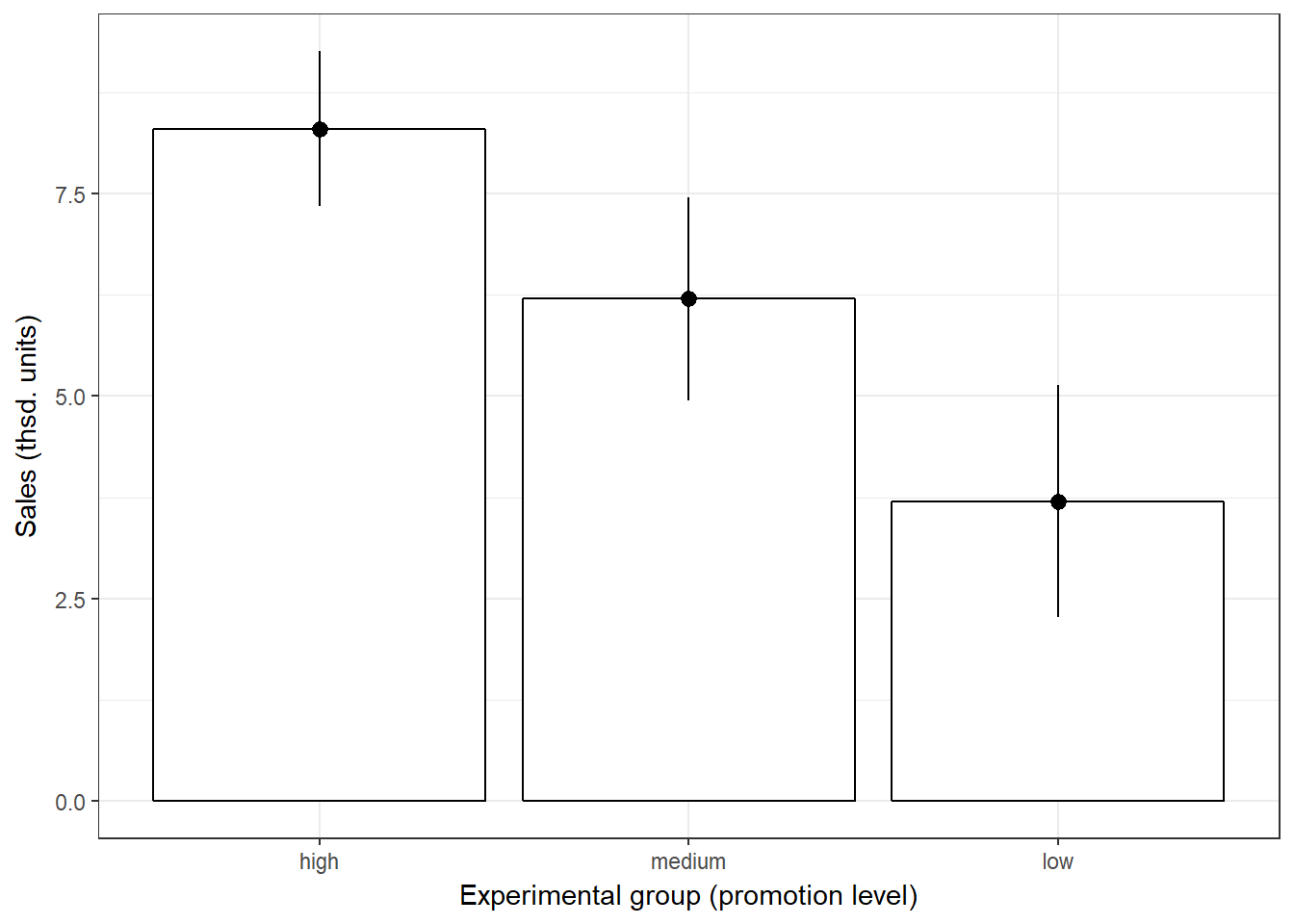

## X1 1 10 3.7 2 3.5 3.62 2.22 1 7 6 0.22 -1.57 0.63In addition, you should visualize the data using appropriate plots:

#Plot of means

library(plyr)

library(ggplot2)

ggplot(online_store_promo, aes(Promotion, Sales)) +

stat_summary(fun.y = mean, geom = "bar", fill = "White", colour = "Black") +

stat_summary(fun.data = mean_cl_normal, geom = "pointrange") +

labs(x = "Experimental group (promotion level)", y = "Sales (thsd. units)") +

theme_bw()

Figure 7.1: Plot of means

Note that ANOVA is an omnibus test, which means that we test for an overall difference between groups. Hence, the test will only tell you if the group means are different, but it won’t tell you exactly which groups are differnt from another.

So why don’t we then just conduct a series of t-tests for all combinations of groups (i.e., high vs. low, low vs. medium, medium vs. high)? The reason is that if we assume each test to be independent, then there is a 5% probability of falsely rejecting the null hypothesis (Type I error) for each test. In our case:

- High vs. low (α = 0.05)

- High vs. medium (α = 0.05)

- Medium vs. low (α = 0.05)

This means that the overall probability of making a Type I error is 1-(0.953) = 0.143, since the probability of no Type I error is 0.95 for each of the three tests. Consequently, the Type I error probability would be 14.3%, which is above the conventional standard of 5%. This is also known as the familywise or experimentwise error.

7.2 Decomposing variance

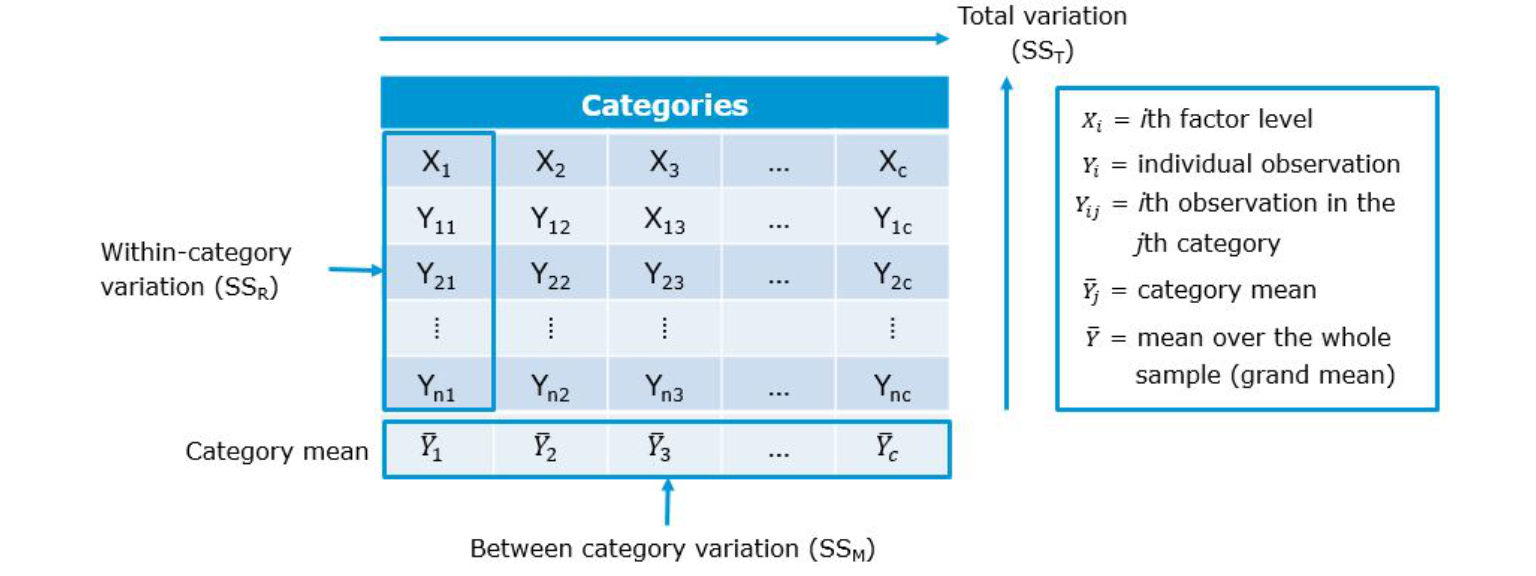

The basic concept underlying ANOVA is the decomposition of the variance in the data. There are three variance components which we need to consider:

- We calculate how much variability there is between scores: Total sum of squares (SST)

- We then calculate how much of this variability can be explained by the model we fit to the data (i.e., how much variability is due to the experimental manipulation): Model sum of squares (SSM)

- … and how much cannot be explained (i.e., how much variability is due to individual differences in performance): Residual sum of squares (SSR)

The following figure shows the different variance components using a generalized data matrix:

Figure 7.2: Decomposing variance

The total variation is determined by the variation between the categories (due to our experimental manipulation) and the within-category variation that is due to extraneous factors (e.g., differences between the products that are included in each group):

\[\begin{equation} \begin{split} SS_T= SS_M+SS_R \end{split} \tag{7.1} \end{equation}\]To get a better feeling how this relates to our data set, we can look at the data in a slightly different way. Specifically, we can use the dcast(...) function from the reshape2 package to convert the data to wide format:

library(reshape2)

dcast(online_store_promo, Obs ~ Promotion, value.var = "Sales")In this example, X1 from the generalized data matrix above would refer to the factor level “high”, X2 to the level “medium”, and X3 to the level “low”. Y11 refers to the first data point in the first row (i.e., “10”), Y12 to the second data point in the first row (i.e., “8”), etc.. The grand mean (\(\overline{Y}\)) and the category means (\(\overline{Y}_c\)) can be easily computed:

mean(online_store_promo$Sales) #grand mean## [1] 6.066667by(online_store_promo$Sales, online_store_promo$Promotion,

mean) #category mean## online_store_promo$Promotion: high

## [1] 8.3

## --------------------------------------------------------

## online_store_promo$Promotion: medium

## [1] 6.2

## --------------------------------------------------------

## online_store_promo$Promotion: low

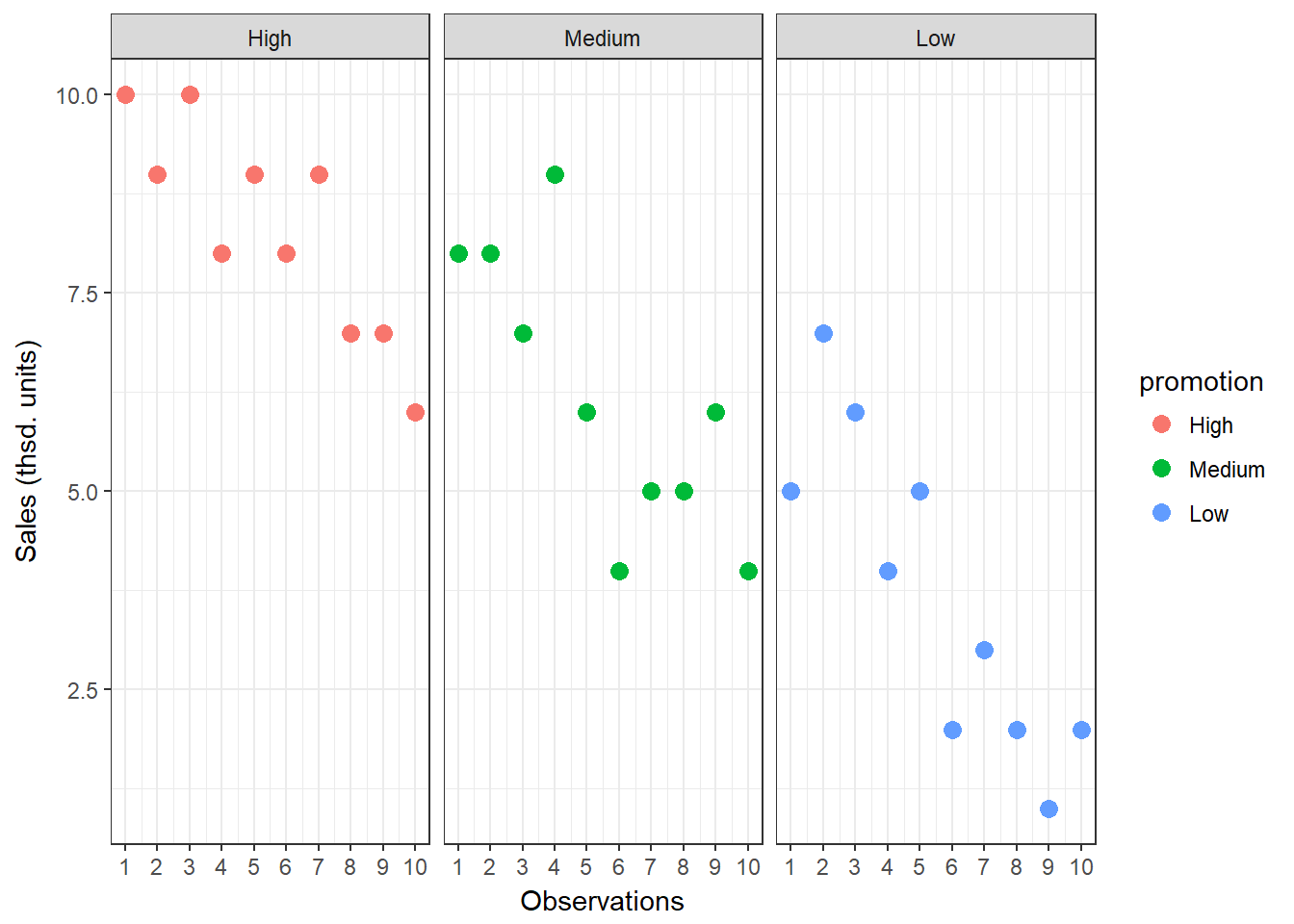

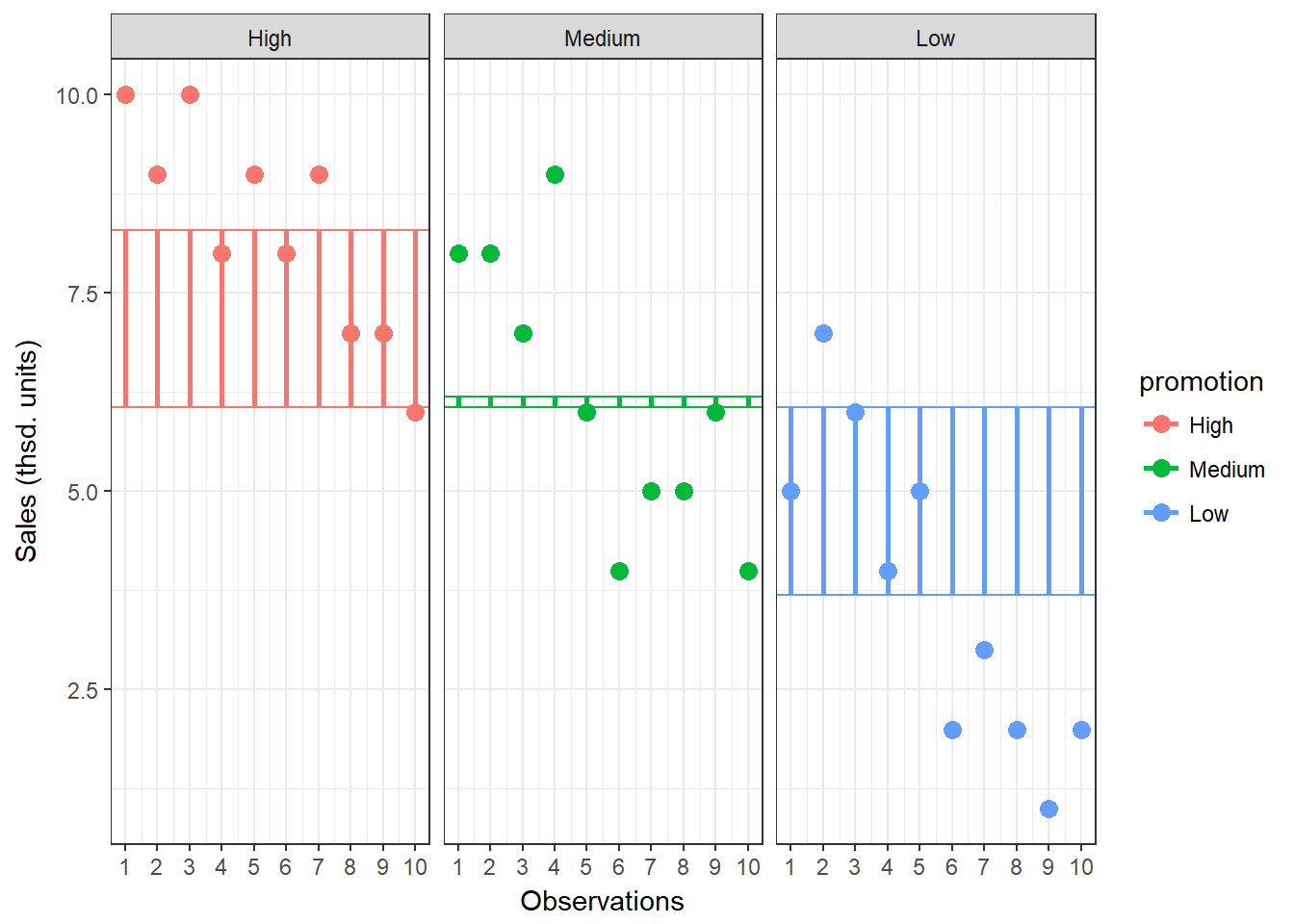

## [1] 3.7To see how each variance component can be derived, let’s look at the data again. The following graph shows the individual observations by experimental group:

Figure 7.3: Sum of Squares

7.2.1 Total sum of squares

To compute the total variation in the data, we consider the difference between each observation and the grand mean. The grand mean is the mean over all observations in the dataset. The vertical lines in the following plot measure how far each observation is away from the grand mean:

Figure 7.4: Total Sum of Squares

The formal representation of the total sum of squares (SST) is:

\[\begin{equation} \begin{split} SS_T= \sum_{i=1}^{N} (Y_i-\overline{Y})^2 \end{split} \tag{7.2} \end{equation}\]This means that we need to subtract the grand mean from each individual data point, square the difference, and sum up over all the squared differences. Thus, in our example, the total sum of squares can be calculated as:

\[ \begin{align} SS_T =&(10−6.067)^2 + (9−6.067)^2 + … + (7−6.067)^2\\ &+(8−6.067)^2 + (8−6.067)^2 + … + (4−6.067)^2\\ &+(5−6.067)^2 + (7−6.067)^2 + … + (2−6.067)^2\\ =& 185.867 \end{align} \]

You could also compute this in R using:

SST <- sum((online_store_promo$Sales - mean(online_store_promo$Sales))^2)

SST## [1] 185.8667For the subsequent analyses, it is important to understand the concept behind the degrees of freedom. Remember that in order to estimate a population value from a sample, we need to hold something in the population constant. In ANOVA, the df are generally one less than the number of values used to calculate the SS. For example, when we estimate the population mean from a sample, we assume that the sample mean is equal to the population mean. Then, in order to estimate the population mean from the sample, all but one scores are free to vary and the remaining score needs to be the value that keeps the population mean constant. In our example, we used all 30 observations to calculate the sum of square, so the total degrees of freedom (dfT) are:

\[\begin{equation} \begin{split} df_T = N-1=30-1=29 \end{split} \tag{7.3} \end{equation}\]7.2.2 Model sum of squares

Now we know that there are 185.867 units of total variation in our data. Next, we compute how much of the total variation can be explained by the differences between groups (i.e., our experimental manipulation). To compute the explained variation in the data, we consider the difference between the values predicted by our model for each observation (i.e., the group mean) and the grand mean. The group mean refers to the mean value within the experimental group. The vertical lines in the following plot measure how far the predicted value for each observation (i.e., the group mean) is away from the grand mean:

Figure 7.5: Model Sum of Squares

The formal representation of the model sum of squares (SSM) is:

\[\begin{equation} \begin{split} SS_M= \sum_{j=1}^{c} n_j(\overline{Y}_j-\overline{Y})^2 \end{split} \tag{7.4} \end{equation}\]where c denotes the number of categories (experimental groups). This means that we need to subtract the grand mean from each group mean, square the difference, and sum up over all the squared differences. Thus, in our example, the model sum of squares can be calculated as:

\[ \begin{align} SS_M &= 10*(8.3−6.067)^2 + 10*(6.2−6.067)^2 + 10*(3.7−6.067)^2 \\ &= 106.067 \end{align} \]

You could also compute this manually in R using:

SSM <- sum(10 * (by(online_store_promo$Sales, online_store_promo$Promotion,

mean) - mean(online_store_promo$Sales))^2)

SSM## [1] 106.0667In this case, we used the three group means to calculate the sum of squares, so the model degrees of freedom (dfM) are:

\[\begin{equation} \begin{split} df_M= c-1=3-1=2 \end{split} \tag{7.5} \end{equation}\]7.2.3 Residual sum of squares

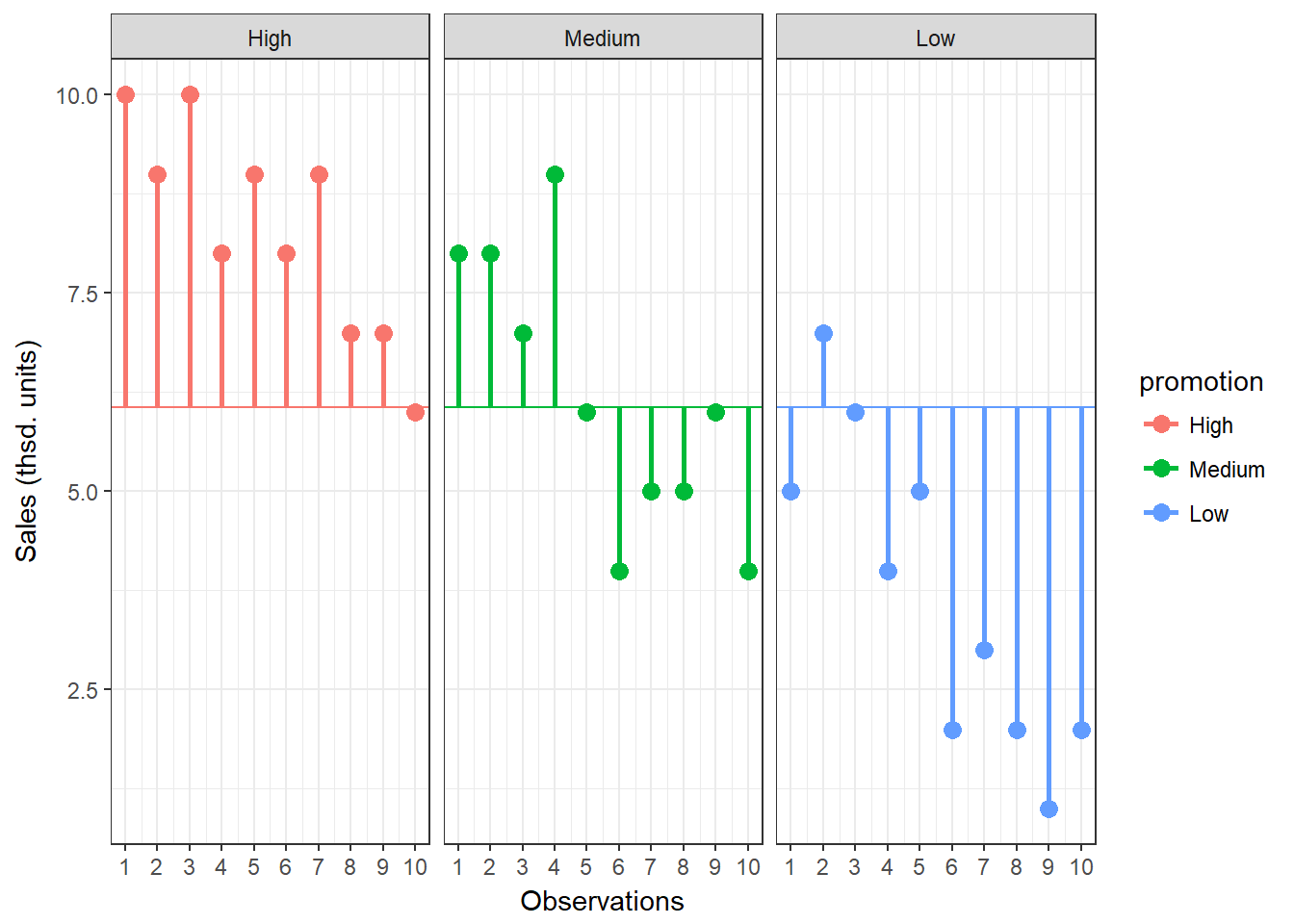

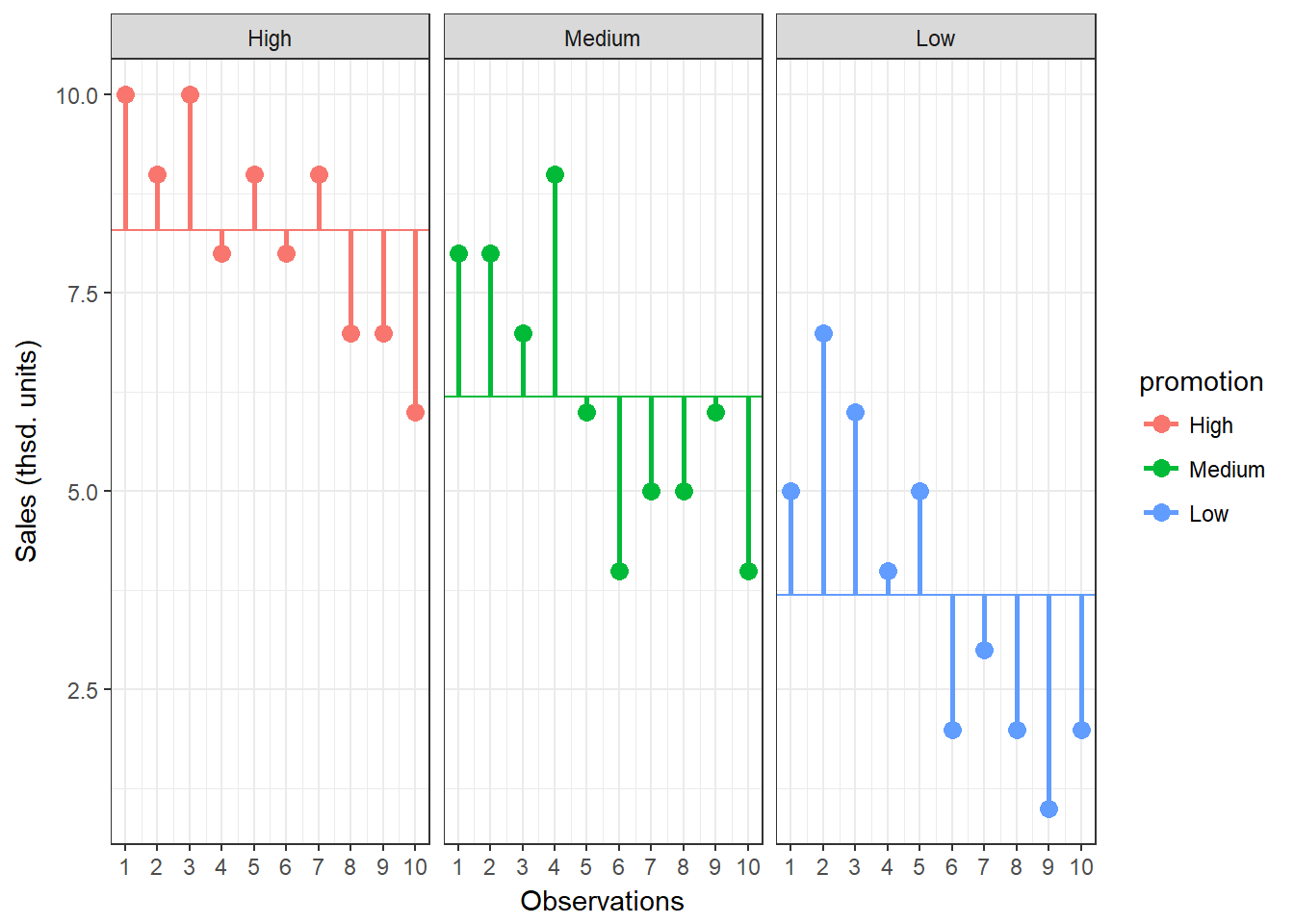

Lastly, we calculate the amount of variation that cannot be explained by our model. In ANOVA, this is the sum of squared distances between what the model predicts for each data point (i.e., the group means) and the observed values. In other words, this refers to the amount of variation that is caused by extraneous factors, such as differences between product characteristics of the products in the different experimental groups. The vertical lines in the following plot measure how far each observation is away from the group mean:

Figure 7.6: Residual Sum of Squares

The formal representation of the residual sum of squares (SSR) is:

\[\begin{equation} \begin{split} SS_R= \sum_{j=1}^{c} \sum_{i=1}^{n} ({Y}_{ij}-\overline{Y}_{j})^2 \end{split} \tag{7.6} \end{equation}\]This means that we need to subtract the group mean from each individual obseration, square the difference, and sum up over all the squared differences. Thus, in our example, the model sum of squares can be calculated as:

\[ \begin{align} SS_R =& (10−8.3)^2 + (9−8.3)^2 + … + (6−8.3)^2 \\ &+(8−6.2)^2 + (8−6.2)^2 + … + (4−6.2)^2 \\ &+ (5−3.7)^2 + (7−3.7)^2 + … + (2−3.7)^2 \\ =& 79.8 \end{align} \]

You could also compute this in R using:

SSR <- sum((online_store_promo$Sales - rep(by(online_store_promo$Sales,

online_store_promo$Promotion, mean), each = 10))^2)

SSR## [1] 79.8In this case, we used the 10 values for each of the SS for each group, so the residual degrees of freedom (dfR) are:

\[\begin{equation} \begin{split} df_R= (n_1-1)+(n_2-1)+(n_3-1) \\ =(10-1)+(10-1)+(10-1)=27 \end{split} \tag{7.7} \end{equation}\]7.2.4 Effect strength

Once you have computed the different sum of squares, you can investigate the effect strength. Eta2 is a measure of the variation in Y that is explained by X:

\[\begin{equation} \begin{split} \eta^2= \frac{SS_M}{SS_T}=\frac{106.067}{185.876}=0.571 \end{split} \tag{7.8} \end{equation}\]To compute this in R:

eta <- SSM/SST

eta## [1] 0.57066The statistic can only take values between 0 and 1. It is equal to 0 when all the category means are equal, indicating that X has no effect on Y. In contrast, it has a value of 1 when there is no variability within each category of X but there is some variability between categories.

7.2.5 Test of significance

How can we determine whether the effect of X on Y is significant?

- First, we calculate the fit of the most basic model (i.e., the grand mean)

- Then, we calculate the fit of the “best” model (i.e., the group means)

- A good model should fit the data significantly better than the basic model

- The F-statistic or F-ratio compares the amount of systematic variance in the data to the amount of unsystematic variance

The F-statistic uses the ratio of mean square related to X (explained variation) and the mean square related to the error (unexplained variation):

\(\frac{SS_M}{SS_R}\)

However, since these are summed values, their magnitude is influenced by the number of scores that were summed. For example, to calculate SSM we only used the sum of 3 values (the group means), while we used 30 and 27 values to calculate SST and SSR, respectively. Thus, we calculate the average sum of squares (“mean square”) to compare the average amount of systematic vs. unsystematic variation by deviding the SS values by the degrees of freedom associated with the respective statistic.

Mean square due to X:

\[\begin{equation} \begin{split} MS_M= \frac{SS_M}{df_M}=\frac{SS_M}{c-1}=\frac{106.067}{(3-1)} \end{split} \tag{7.9} \end{equation}\]Mean square due to error:

\[\begin{equation} \begin{split} MS_R= \frac{SS_R}{df_R}=\frac{SS_R}{N-c}=\frac{79.8}{(30-3)} \end{split} \tag{7.10} \end{equation}\]Now, we compare the amount of variability explained by the model (experiment), to the error in the model (variation due to extraneous variables). If the model explains more variability than it can’t explain, then the experimental manipulation has had a significant effect on the outcome (DV). The F-radio can be derived as follows:

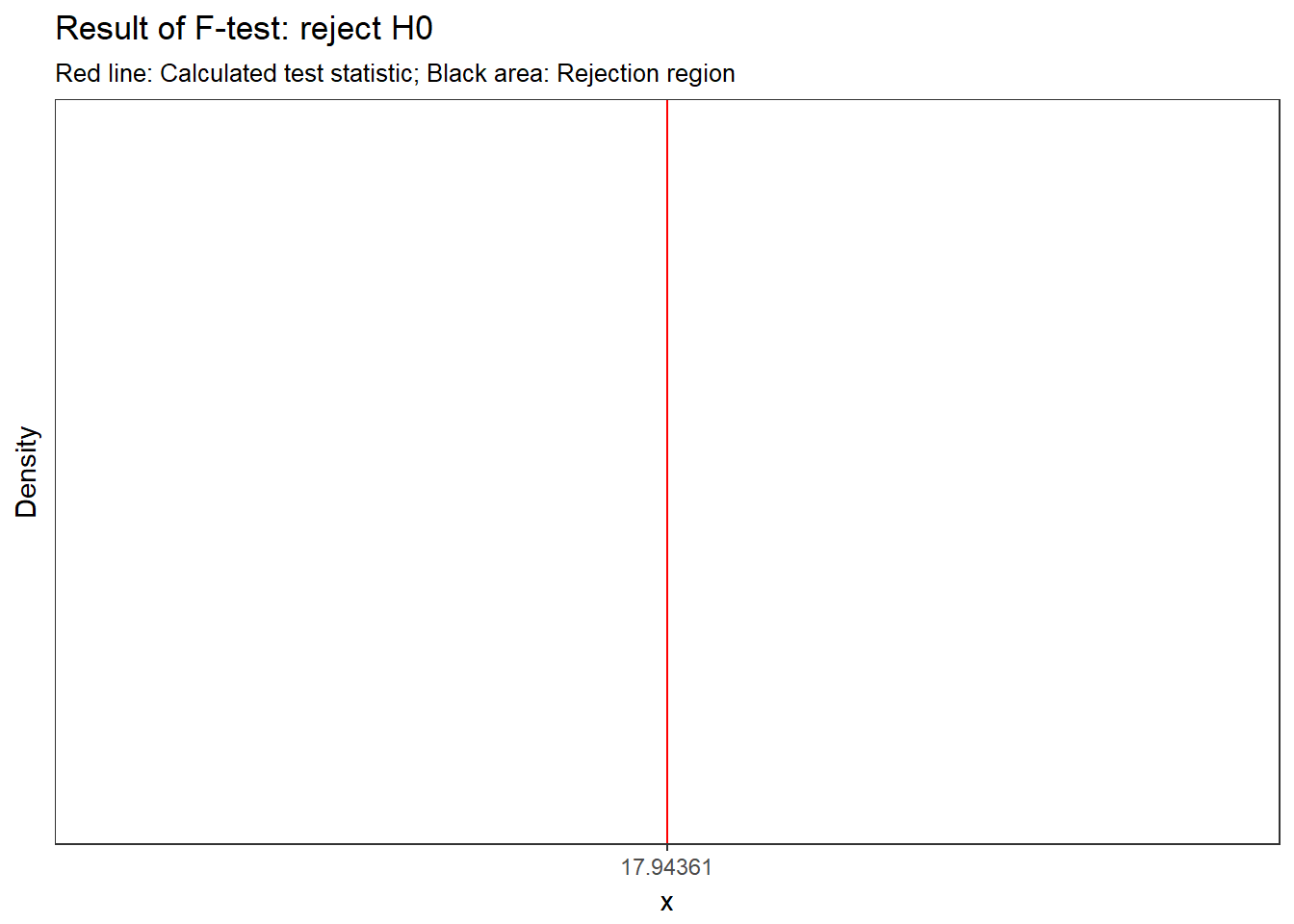

\[\begin{equation} \begin{split} F= \frac{MS_M}{MS_R}=\frac{SS_R}{N-c}=\frac{\frac{106.067}{(3-1)}}{\frac{79.8}{(30-3)}}=17.944 \end{split} \tag{7.11} \end{equation}\]You can easily compute this in R:

f_ratio <- (SSM/2)/(SSR/27)

f_ratio## [1] 17.94361This statistic follows the F distribution with (m = c – 1) and (n = N – c) degrees of freedom. This means that, like the \(\chi^2\) distribution, the shape of the F-distribution depends on the degrees of freedom. In this case, the shape depends on the degrees of freedom associated with the numerator and denominator used to compute the F-ratio. The following figure shows the shape of the F-distribution for different degrees of freedom:

Figure 7.7: The F distribution

The outcome of the test is one of the following:

- If the null hypothesis of equal category means is not rejected, then the independent variable does not have a significant effect on the dependent variable

- If the null hypothesis is rejected, then the effect of the independent variable is significant

For 2 and 27 degrees of freedom, the critical value of F is 3.35 for α=0.05. As usual, you can either look up these values in a table or use the appropriate function in R:

f_crit <- qf(0.95, df1 = 2, df2 = 27) #critical value

f_crit## [1] 3.354131f_ratio > f_crit #test if calculated test statistic is larger than critical value## [1] TRUEThe output tells us that the calculated test statistic exeeds the critical value. We can also show the test result visually:

Figure 7.8: Visual depiction of the test result

Thus, we conclude that because FCAL = 17.944 > FCR = 3.35, H0 is rejected!

Interpretation: one or more of the differences between means are statistically significant.

Reporting: There was a significant effect of promotion on sales levels, F(2,27) = 17.94, p < 0.05, η = 0.571.

Remember: This doesn’t tell us where the differences between groups lie. To find out which group means exactly differ, we need to use post-hoc procedures (see below).

You don’t have to compute these statistics manually! Luckily, there is a function for ANOVA in R, which does the above calculations for you as we will see in the next section.

7.3 One-way ANOVA

7.3.1 Basic ANOVA

As already indicated, one-way ANOVA is used when there is only one categorical variable (factor). Before conducting ANOVA, you need to check if the assumptions of the test are fulfilled. The assumptions of ANOVA are discussed in the following sections.

Independence of observations

The observations in the groups should be independent. Because we randomly assigned the products to the experimental conditions, this assumption can be assumed to be met.

Distributional assumptions

ANOVA is relatively immune to violations to the normality assumption when sample sizes are large due to the Central Limit Theorem. However, if your sample is small (i.e., n < 30 per group) you may nevertheless want to check the normality of your data, e.g., by using the Shapiro-Wilk test or QQ-Plot. In our example, we only have 10 observations per group, which means that we cannot rely on the Central Limit Theorem and we should test the normality of our data. This can be done using the Shapiro-Wilk Test, which has the Null Hyposesis that the data is normally distributed. Hence, an insignificant test results means that the data can be assumed to be approximately normally distributed:

shapiro.test(online_store_promo[online_store_promo$Promotion ==

"low", ]$Sales)##

## Shapiro-Wilk normality test

##

## data: online_store_promo[online_store_promo$Promotion == "low", ]$Sales

## W = 0.93497, p-value = 0.4985shapiro.test(online_store_promo[online_store_promo$Promotion ==

"medium", ]$Sales)##

## Shapiro-Wilk normality test

##

## data: online_store_promo[online_store_promo$Promotion == "medium", ]$Sales

## W = 0.93247, p-value = 0.4726shapiro.test(online_store_promo[online_store_promo$Promotion ==

"high", ]$Sales)##

## Shapiro-Wilk normality test

##

## data: online_store_promo[online_store_promo$Promotion == "high", ]$Sales

## W = 0.93185, p-value = 0.4664Since the test result is insignificant for all groups, we can conclude that the data approximately follow a normal distribution.

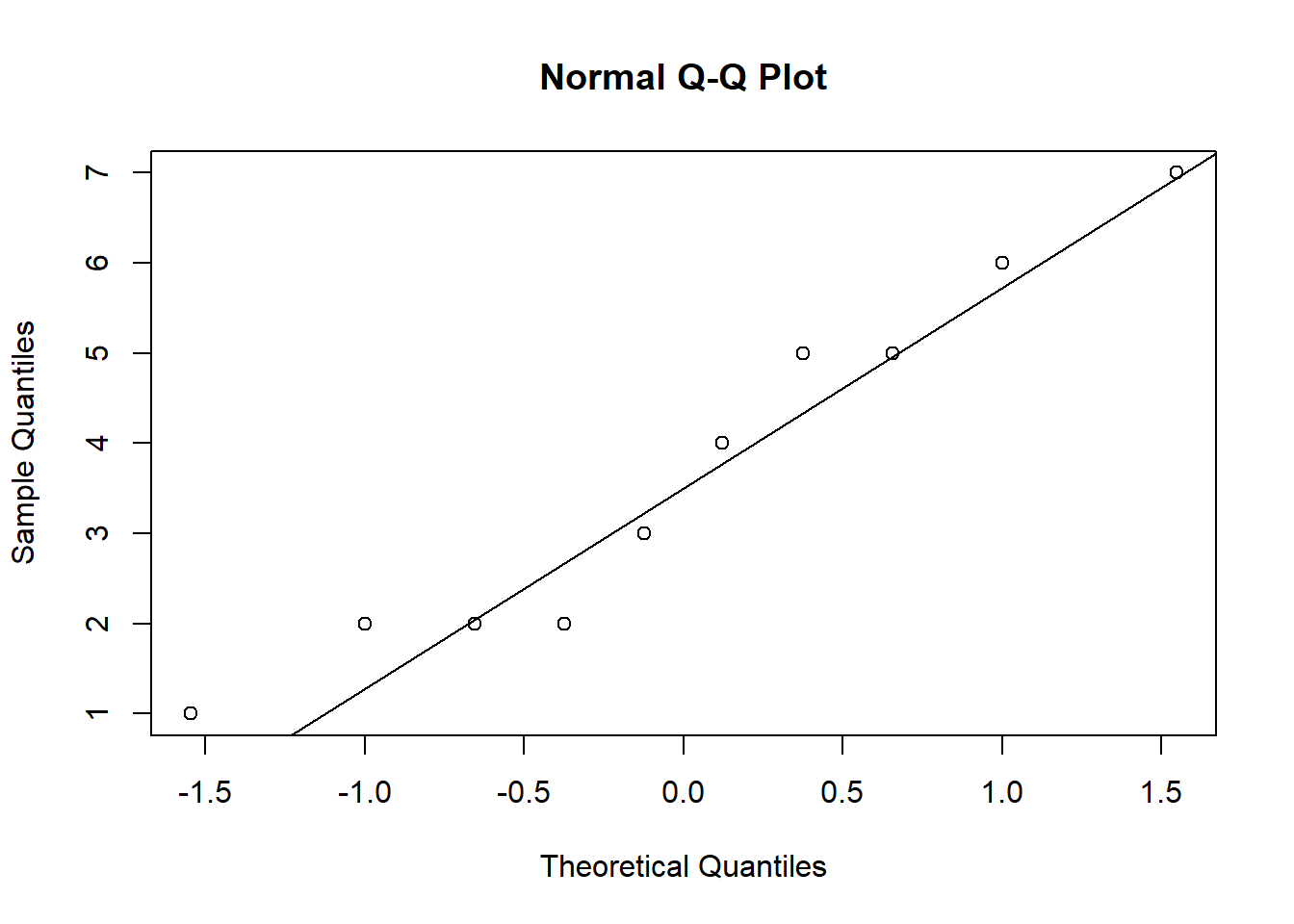

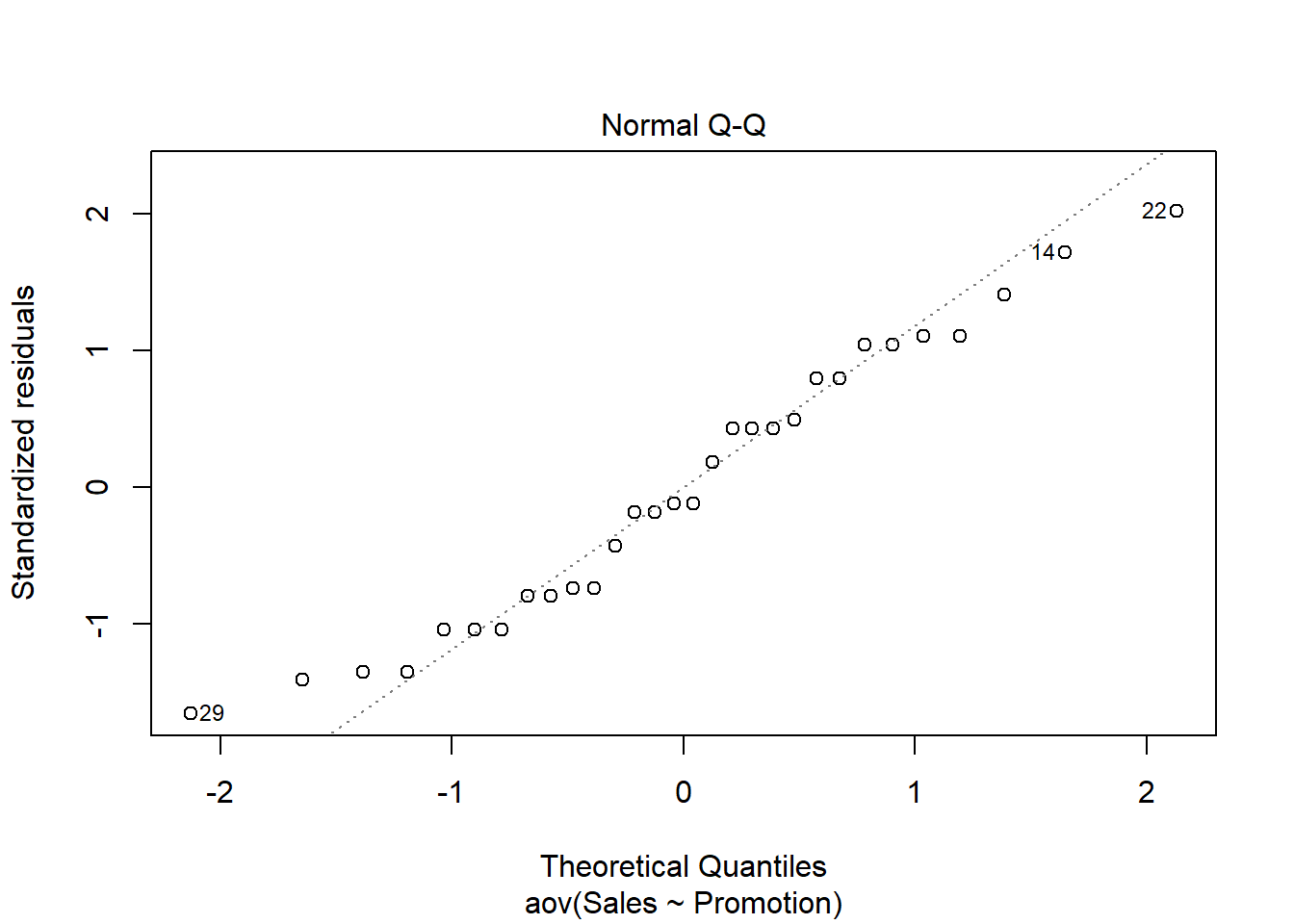

We could also test the distributional assumptions visually using a Q-Q plot (i.e., quantile-quantile plot). This plot can be used to assess if a set of data plausibly came from some theoretical distribution such as the Normal distribution. Since this is just a visual check, it is somewhat subjective. But it may help us to judge if our assumption is plausible, and if not, which data points contribute to the violation. A Q-Q plot is a scatterplot created by plotting two sets of quantiles against one another. If both sets of quantiles came from the same distribution, we should see the points forming a line that’s roughly straight. In other words, Q-Q plots take your sample data, sort it in ascending order, and then plot them versus quantiles calculated from a theoretical distribution. Quantiles are often referred to as “percentiles” and refer to the points in your data below which a certain proportion of your data fall. Recall, for example, the standard Normal distribution with a mean of 0 and a standard deviation of 1. Since the 50th percentile (or 0.5 quantile) is 0, half the data lie below 0. The 95th percentile (or 0.95 quantile), is about 1.64, which means that 95 percent of the data lie below 1.64. The 97.5th quantile is about 1.96, which means that 97.5% of the data lie below 1.96. In the Q-Q plot, the number of quantiles is selected to match the size of your sample data.

To create the Q-Q plot for the normal distribution, you may use the qqnorm() function, which takes the data to be tested as an argument. Using the qqline() function subsequently on the data creates the line on which the data points should fall based on the theoretical quantiles. If the individual data points deviate a lot from this line, it means that the data is not likely to follow a normal distribution.

qqnorm(online_store_promo[online_store_promo$Promotion ==

"low", ]$Sales)

qqline(online_store_promo[online_store_promo$Promotion ==

"low", ]$Sales)

Figure 7.9: Q-Q plot 1

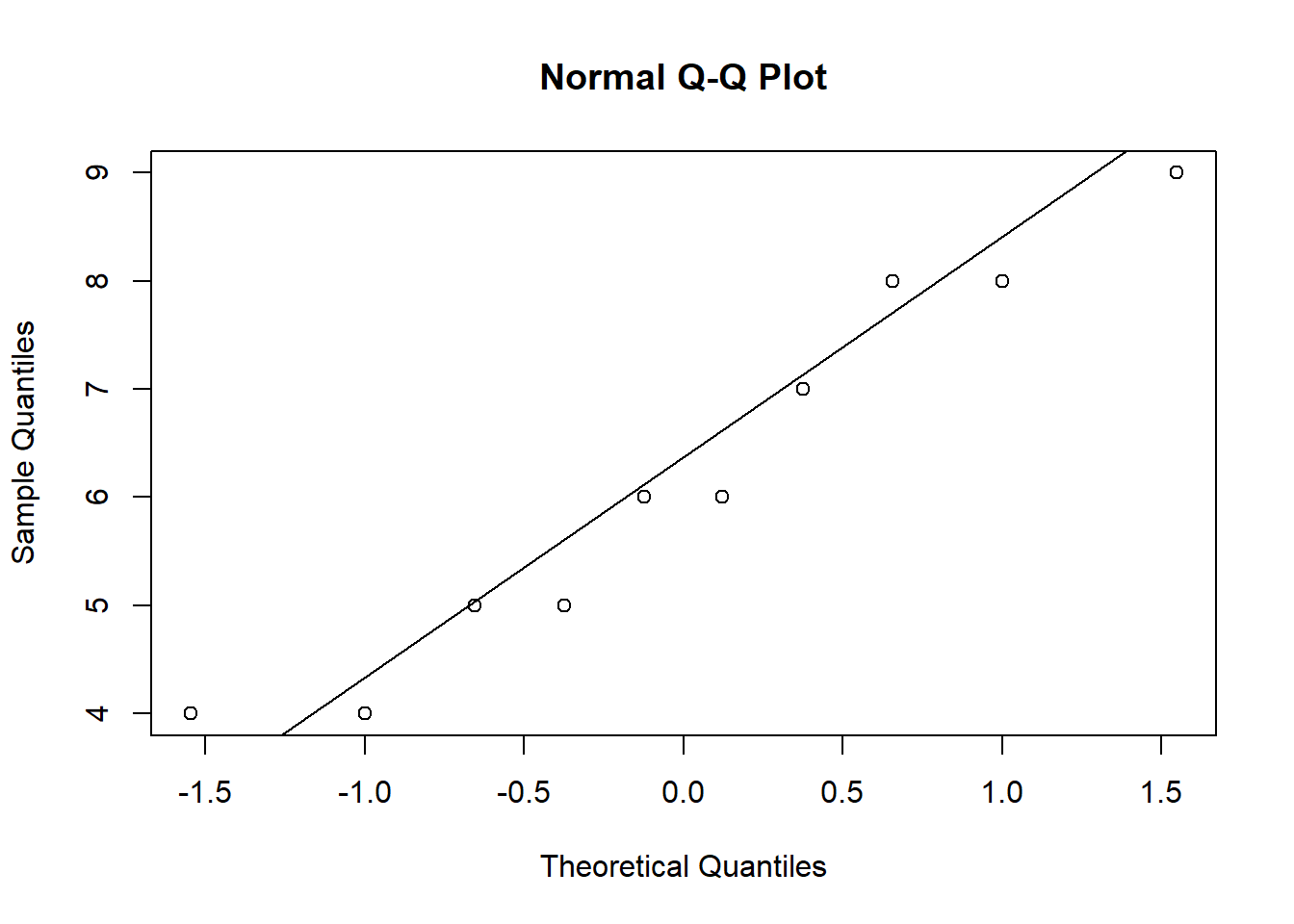

qqnorm(online_store_promo[online_store_promo$Promotion ==

"medium", ]$Sales)

qqline(online_store_promo[online_store_promo$Promotion ==

"medium", ]$Sales)

Figure 7.9: Q-Q plot 2

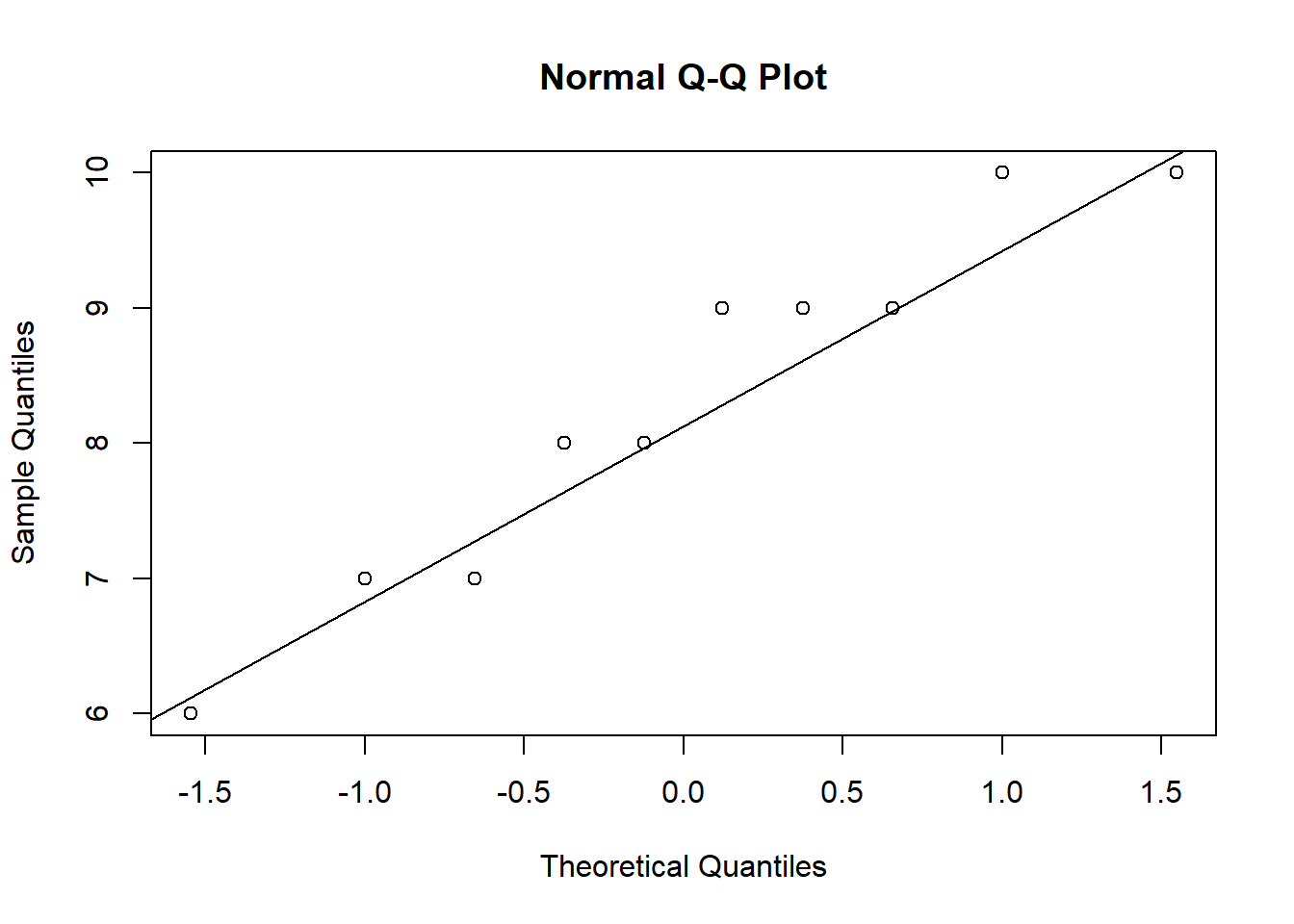

qqnorm(online_store_promo[online_store_promo$Promotion ==

"high", ]$Sales)

qqline(online_store_promo[online_store_promo$Promotion ==

"high", ]$Sales)

Figure 7.9: Q-Q plot 3

The Q-Q plots suggest an approximately Normal distribution. If the assumption had been violated, you might consider transforming your data or resort to a non-parametric test.

Homogeneity of variance

You can test the homogeneity of variances in R using Levene’s test:

library(car)

leveneTest(Sales ~ Promotion, data = online_store_promo,

center = mean)## Levene's Test for Homogeneity of Variance (center = mean)

## Df F value Pr(>F)

## group 2 1.3532 0.2754

## 27The null hypothesis of the test is that the group variances are equal. Thus, if the test result is significant it means that the variances are not equal. If we cannot reject the null hypothesis (i.e., the group variances are not significantly different), we can proceed with the ANOVA as follows:

aov <- aov(Sales ~ Promotion, data = online_store_promo)

summary(aov)## Df Sum Sq Mean Sq F value Pr(>F)

## Promotion 2 106.1 53.03 17.94 0.000011 ***

## Residuals 27 79.8 2.96

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1You can see that the p-value is smaller than 0.05. This means that, if there really was no difference between the population means (i.e., the Null hyposesis was true), the probability of the observed differences (or larger differences) is less than 5%.

To compute η2 from the output, we can extract the relevant sum of squares as follows

summary(aov)[[1]]$"Sum Sq"[1]/(summary(aov)[[1]]$"Sum Sq"[1] +

summary(aov)[[1]]$"Sum Sq"[2])## [1] 0.57066You can see that the results match the results from our manual computation above.

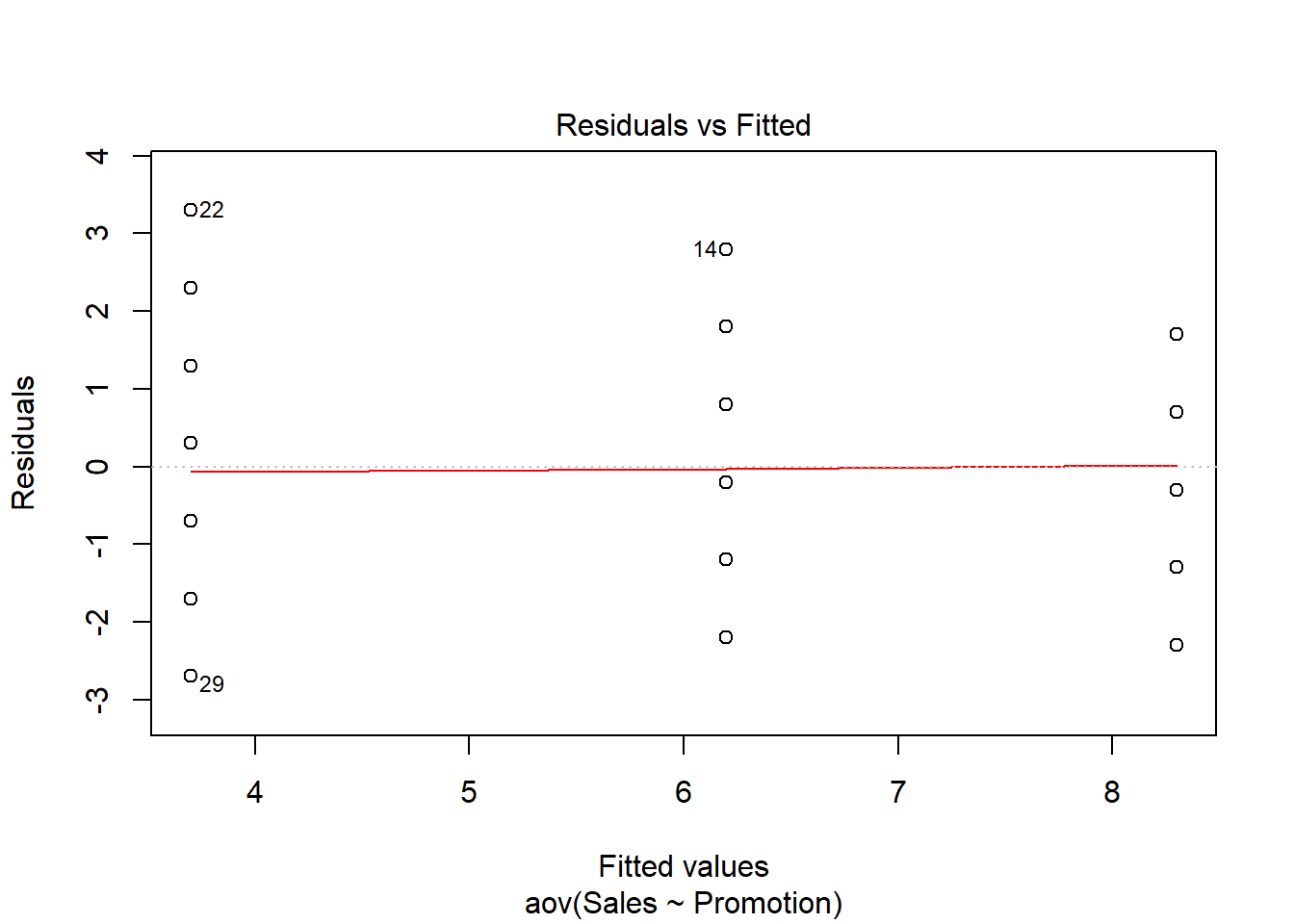

The aov() function also automatically generates some plots that you can use to judge if the model assumptions are met. We will inspect two of the plots here.

We will use the first plot to inspect if the residual variances are equal across the experimental groups:

plot(aov, 1)

Generally, the residual variance (i.e., the range of values on the y-axis) should be the same for different levels auf our independent variable. The plot shows, that there are some slight differences. Notably, the range of residuals is highest for the “low” group and lowest for the “high” group. However, the differences are not that large and since the Levene’s test could not reject the Null of equal variances, we conclude that the variances are similar enough in this case.

The second plot can be used to test the assumption that the residuals are approximately normally distributed. We use a Q-Q plot to test this assumption:

plot(aov, 2)

The plot suggests that the residuals are approximately normally distributed. We could also test this by extracting the residuals from the anova output using the resid() function and using the Shapiro-Wilk test:

shapiro.test(resid(aov))##

## Shapiro-Wilk normality test

##

## data: resid(aov)

## W = 0.96094, p-value = 0.3272Confirming the impression from the Q-Q plot, we cannot reject the Null that the residuals are approximately normally distributed.

Note that if Levene’s test would have been significant (i.e., variances are not equal), we would have needed to either resort to non-parametric tests (see below), or compute the Welch’s F-ratio instead:

oneway.test(Sales ~ Promotion, data = online_store_promo)##

## One-way analysis of means (not assuming equal variances)

##

## data: Sales and Promotion

## F = 18.09, num df = 2.00, denom df = 17.47, p-value = 0.00005541You can see that the results are fairly similar, since the variances turned out to be fairly equal across groups.

7.3.2 Post-hoc tests

Provided that significant differences were detected by the overall ANOVA you can find out which group means are different using post hoc procedures. Post hoc procedures are designed to conduct pairwise comparisons of all different combinations of the treatment groups by correcting the level of significance for each test such that the overall Type I error rate (α) across all comparisons remains at 0.05.

In other words, we rejected H0: μ1= μ2= μ3, and now we would like to test:

Test1:

\(H_0: \mu_1 = \mu_2\)

Test2:

\(H_0: \mu_1 = \mu_3\)

Test3:

\(H_0: \mu_2 = \mu_3\)

There are several post hoc procedures available to choose from. In this tutorial, we will cover Bonferroni and Tukey’s HSD (“honest significant differences”). Both tests control for familywise error. Bonferroni tends to have more power when the number of comparisons is small, whereas Tukey’ HSDs is better when testing large numbers of means.

7.3.2.1 Bonferroni

One of the most popular (and easiest) methods to correct for the familywise error rate is to conduct the individual t-tests and divide α by the number of comparisons („k“):

\[\begin{equation} \begin{split} p_{CR}= \frac{\alpha}{k} \end{split} \tag{7.12} \end{equation}\]In our example with three groups:

\(p_{CR}= \frac{0.05}{3}=0.017\)

Thus, the “corrected” critical p-value is now 0.017 instead of 0.05 (i.e., the critical t value is higher). You can implement the Bonferroni procedure in R using:

pairwise.t.test(online_store_promo$Sales, online_store_promo$Promotion,

data = online_store_promo, p.adjust.method = "bonferroni")##

## Pairwise comparisons using t tests with pooled SD

##

## data: online_store_promo$Sales and online_store_promo$Promotion

##

## high medium

## medium 0.0329 -

## low 0.0000066 0.0092

##

## P value adjustment method: bonferroniIn the output, you will get the corrected p-values for the individual tests. In our example, we can reject H0 of equal means for all three tests, since p < 0.05 for all combinations of groups.

Note the difference between the results from the post-hoc test compared to individual t-tests. For example, when we test the “medium” vs. “high” groups, the result from a t-test would be:

data_subset <- subset(online_store_promo, Promotion !=

"low")

t.test(Sales ~ Promotion, data = data_subset)##

## Welch Two Sample t-test

##

## data: Sales by Promotion

## t = 3.0137, df = 16.834, p-value = 0.007888

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## 0.6287384 3.5712616

## sample estimates:

## mean in group high mean in group medium

## 8.3 6.2The p-value is lower in the t-test, reflecting the fact that the familywise error is not corrected (i.e., the test is less conservative).

7.3.2.2 Tukey’s HSD

Tukey’s HSD also compares all possible pairs of means (two-by-two combinations; i.e., like a t-test, except that it corrects for family-wise error rate).

Test statistic:

\[\begin{equation} \begin{split} HSD= q\sqrt{\frac{MS_R}{n_c}} \end{split} \tag{7.13} \end{equation}\]where:

- q = value from studentized range table (see e.g., here)

- MSR = Mean Square Error from ANOVA

- nc = number of observations per group

- Decision: Reject H0 if

\(|\overline{Y}_i-\overline{Y}_j | > HSD\) ,

The value from the studentized range table can be obtained using the qtukey() function.

q <- qtukey(0.95, nm = 3, df = 27)

q## [1] 3.506426Hence:

\(HSD= 3.506\sqrt{\frac{2.96}{10}}=1.906\)

Or, in R:

hsd <- q * sqrt(summary(aov)[[1]]$"Mean Sq"[2]/10)

hsd## [1] 1.906269Since all mean differences between groups are larger than 1.906, we can reject the null hypothsis for all individual tests, confirming the results from the Bonferroni test. To compute Tukey’s HSD, we can use the appropriate function from the multcomp package.

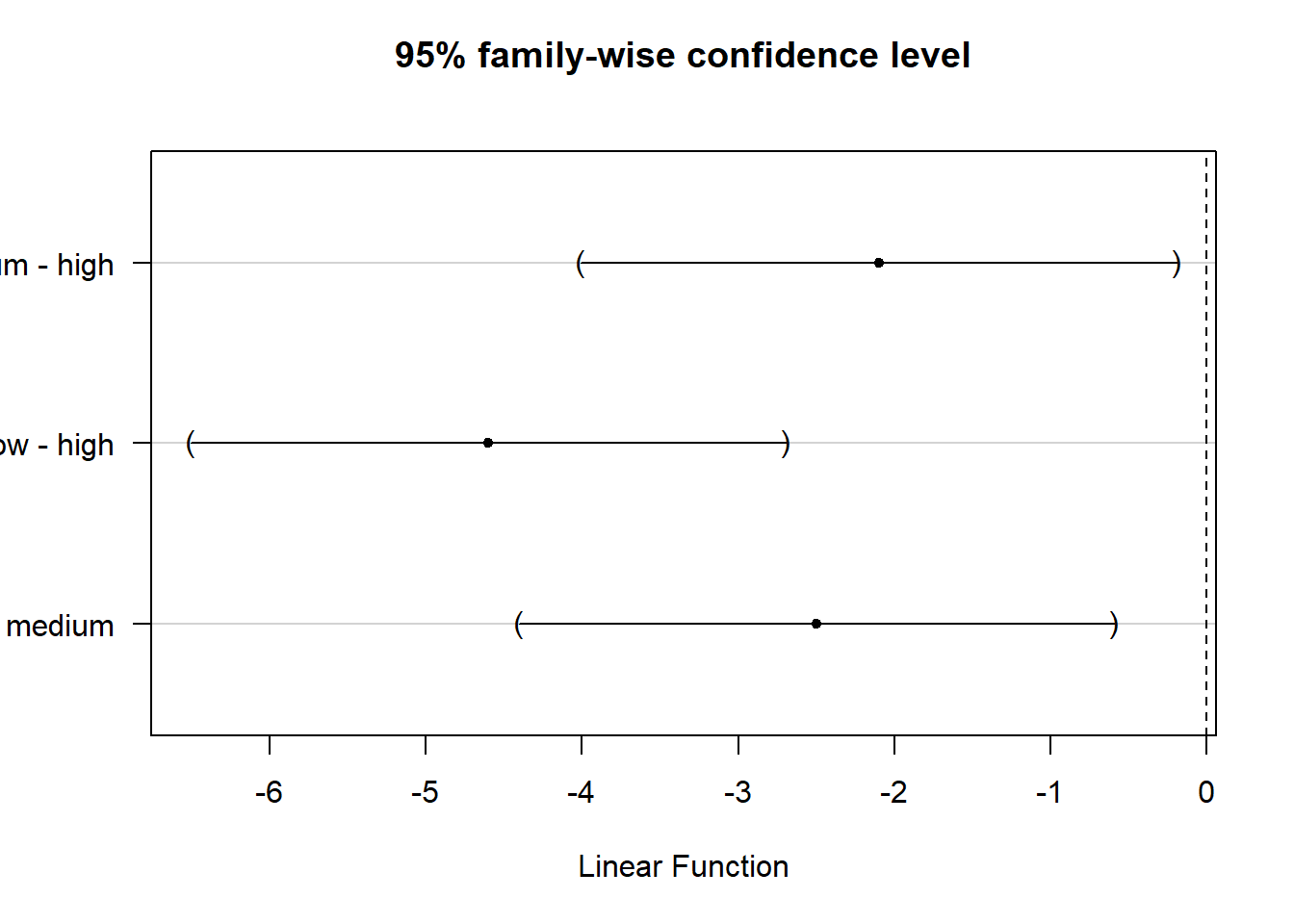

library(multcomp)

tukeys <- glht(aov, linfct = mcp(Promotion = "Tukey"))

summary(tukeys)##

## Simultaneous Tests for General Linear Hypotheses

##

## Multiple Comparisons of Means: Tukey Contrasts

##

##

## Fit: aov(formula = Sales ~ Promotion, data = online_store_promo)

##

## Linear Hypotheses:

## Estimate Std. Error t value Pr(>|t|)

## medium - high == 0 -2.1000 0.7688 -2.731 0.02850 *

## low - high == 0 -4.6000 0.7688 -5.983 < 0.001 ***

## low - medium == 0 -2.5000 0.7688 -3.252 0.00826 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

## (Adjusted p values reported -- single-step method)confint(tukeys)##

## Simultaneous Confidence Intervals

##

## Multiple Comparisons of Means: Tukey Contrasts

##

##

## Fit: aov(formula = Sales ~ Promotion, data = online_store_promo)

##

## Quantile = 2.4797

## 95% family-wise confidence level

##

##

## Linear Hypotheses:

## Estimate lwr upr

## medium - high == 0 -2.1000 -4.0065 -0.1935

## low - high == 0 -4.6000 -6.5065 -2.6935

## low - medium == 0 -2.5000 -4.4065 -0.5935We may also plot the result for the mean differences incl. their confidence intervals:

plot(tukeys)

Figure 7.10: Tukey’s HSD

You can see that the CIs do not cross zero, which means that the true difference between group means is unlikely zero.

mean1 <- mean(online_store_promo[online_store_promo$Promotion ==

"high", "Sales"]) #mean group 'high'

mean1## [1] 8.3mean2 <- mean(online_store_promo[online_store_promo$Promotion ==

"medium", "Sales"]) #mean group 'medium'

mean2## [1] 6.2mean3 <- mean(online_store_promo[online_store_promo$Promotion ==

"low", "Sales"]) #mean group 'low'

mean3## [1] 3.7# CI high vs. medium

mean_diff_high_med <- mean2 - mean1

mean_diff_high_med## [1] -2.1ci_med_high_lower <- mean_diff_high_med - hsd

ci_med_high_upper <- mean_diff_high_med + hsd

ci_med_high_lower## [1] -4.006269ci_med_high_upper## [1] -0.1937307# CI high vs.low

mean_diff_high_low <- mean3 - mean1

mean_diff_high_low## [1] -4.6ci_low_high_lower <- mean_diff_high_low - hsd

ci_low_high_upper <- mean_diff_high_low + hsd

ci_low_high_lower## [1] -6.506269ci_low_high_upper## [1] -2.693731# CI medium vs.low

mean_diff_med_low <- mean3 - mean2

mean_diff_med_low## [1] -2.5ci_low_med_lower <- mean_diff_med_low - hsd

ci_low_med_upper <- mean_diff_med_low + hsd

ci_low_med_lower## [1] -4.406269ci_low_med_upper## [1] -0.5937307Reporting of post hoc results:

The post hoc tests based on Bonferroni and Tukey’s HSD revealed that sales were significantly higher when using medium vs. low levels, high vs. medium levels, as well high vs. low levels of promotion.

The following video summarizes how to conduct a one-way ANOVA in R

7.4 N-way ANOVA

As stated above, N-way ANOVA is used when you have a metric dependent variable and two or more factors with two or more factor levels. In other words, with N-way ANOVA, you can investigate the effects of more than one factor simultaneously. In addition, you can assess interactions between the factors that occur when the effects of one factor on the dependent variable depend on the level (category) of the other factors. An experiment with two or more independent variables is also called a factorial design and N-way ANOVA is therefore also referred to as factorial ANOVA.

Let’s extend our example from above and assume that there was a second factor considered in the experiment. Besides the different levels of promotion intensity, the 30 products were also randomly assigned to two experimental groups that determined whether the product was featured in a newsletter or not. Hence, there is a second factor “newsletter” with two factor levels (i.e., “yes” and “no”):

This means that we have a 2x3 factorial design since we have one factor with 3 levels (i.e., online promotion (1) “high”, (2) “medium”, (3) “low”), and one factor with 2 levels (i.e., newsletter (1) “yes”, (2) “no”). In a next step, we create a new grouping variable that specifiess the treatment using the paste(...) function. The paste(...) function basically concatenates its arguments and separates them by the string given by sep = "".

online_store_promo$Group <- paste(online_store_promo$Promotion,

online_store_promo$Newsletter, sep = "_") #create new grouping variable

online_store_promoAs you can see, we now have six experimental groups:

- = high promotion, newsletter

- = high promotion, no newsletter

- = medium promotion, newsletter

- = medium promotion, no newsletter

- = low promotion, newsletter

- = low promotion, no newletter

In our analysis, we now focus on the comparsion of the means between the six groups. Let’s inspect the group means:

by(online_store_promo$Sales, online_store_promo$Group,

mean) #category means## online_store_promo$Group: high_no

## [1] 7.4

## --------------------------------------------------------

## online_store_promo$Group: high_yes

## [1] 9.2

## --------------------------------------------------------

## online_store_promo$Group: low_no

## [1] 2

## --------------------------------------------------------

## online_store_promo$Group: low_yes

## [1] 5.4

## --------------------------------------------------------

## online_store_promo$Group: medium_no

## [1] 4.8

## --------------------------------------------------------

## online_store_promo$Group: medium_yes

## [1] 7.6We can also plot the means for each factor individually and for both factors combined.

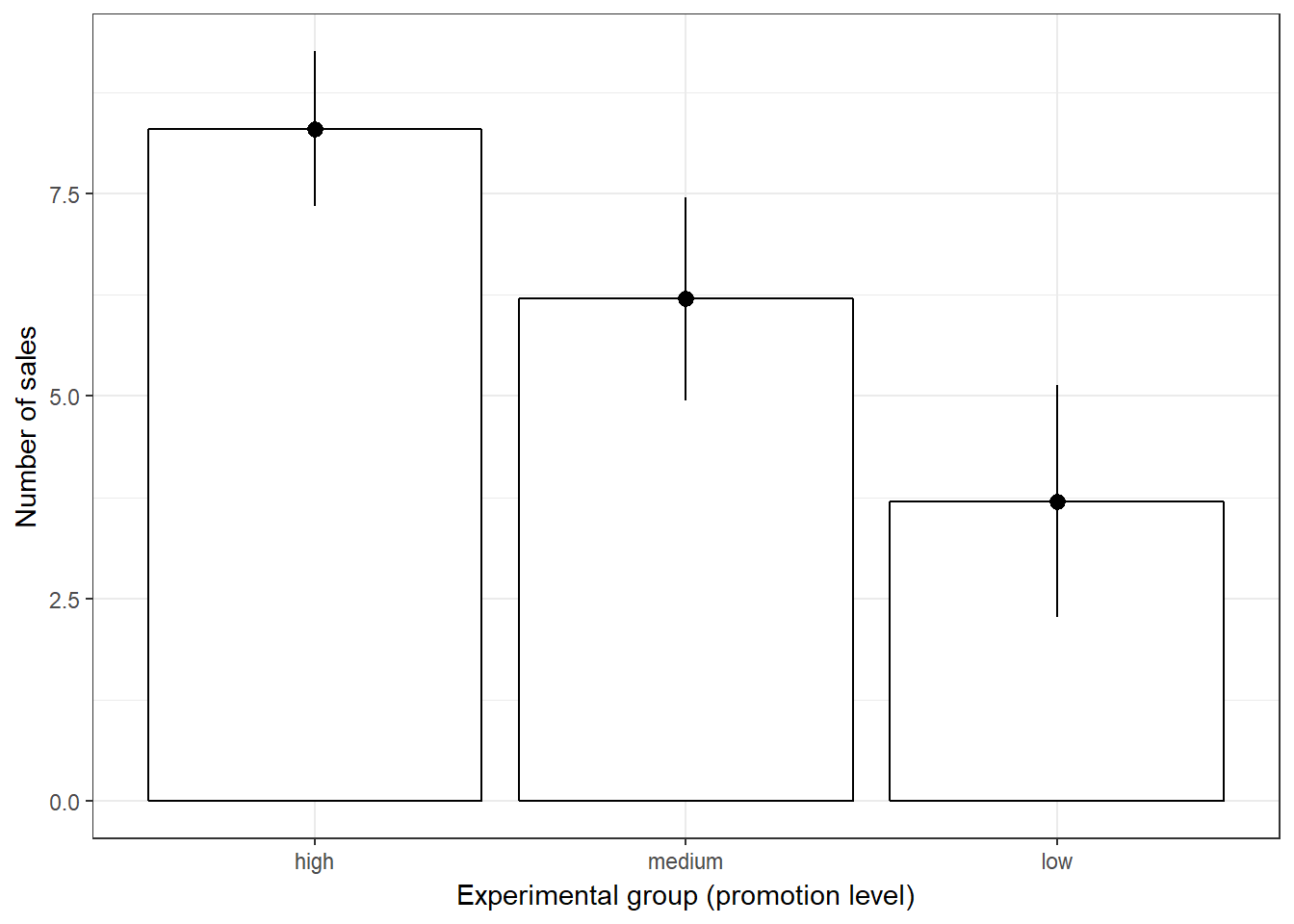

Plot means for first factor “promotion” (same as before):

#Plot of means

ggplot(online_store_promo, aes(Promotion, Sales)) +

stat_summary(fun.y = mean, geom = "bar", fill="White", colour="Black") +

stat_summary(fun.data = mean_cl_normal, geom = "pointrange") +

labs(x = "Experimental group (promotion level)", y = "Number of sales") +

theme_bw()

Figure 7.11: Plot of means (in-store promotion)

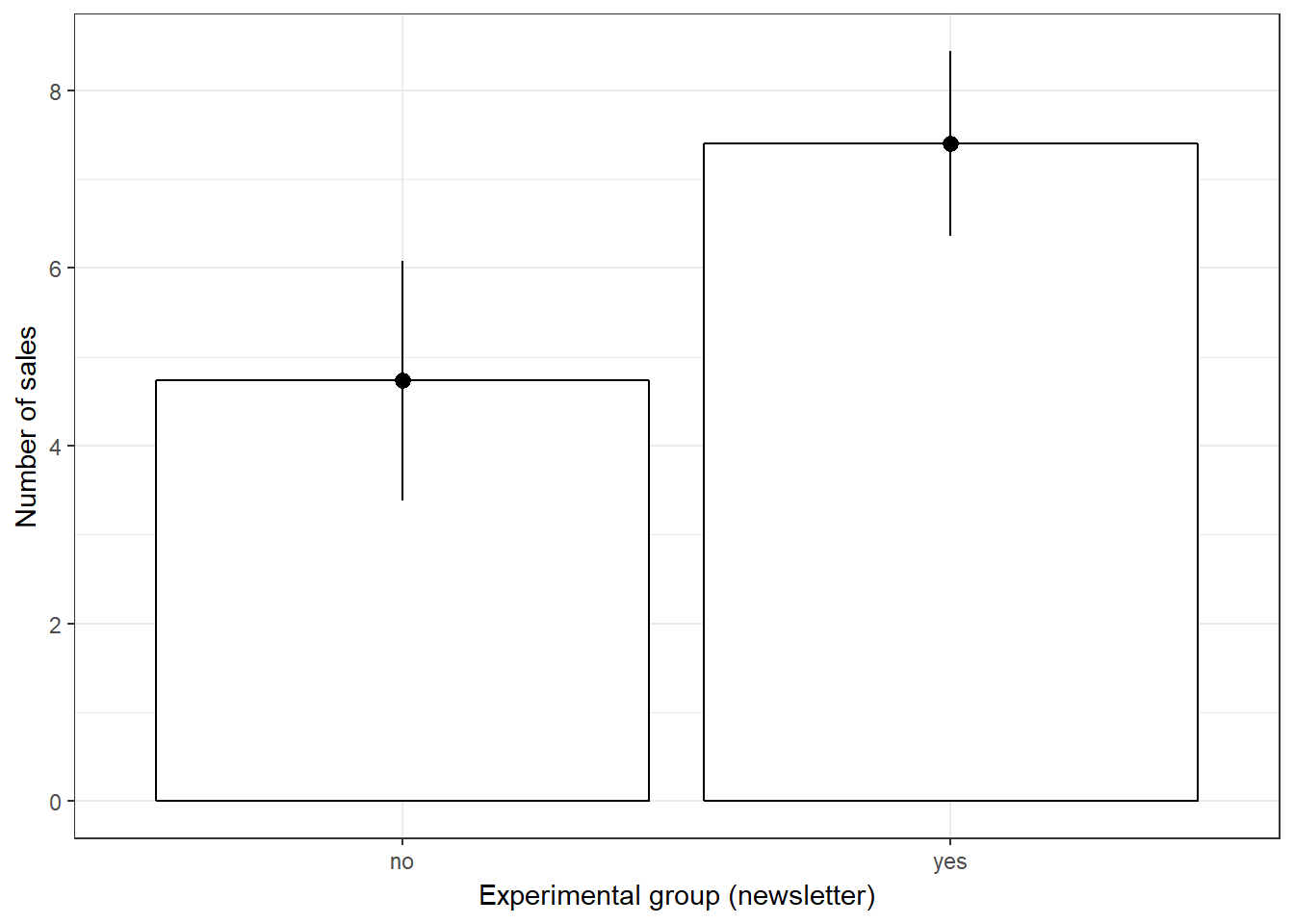

Plot means for second factor “newsletter”:

#Plot of means

ggplot(online_store_promo, aes(Newsletter, Sales)) +

stat_summary(fun.y = mean, geom = "bar", fill="White", colour="Black") +

stat_summary(fun.data = mean_cl_normal, geom = "pointrange") +

labs(x = "Experimental group (newsletter)", y = "Number of sales") +

theme_bw()

Figure 7.12: Plot of means (newsletter)

Plot means for both factors together:

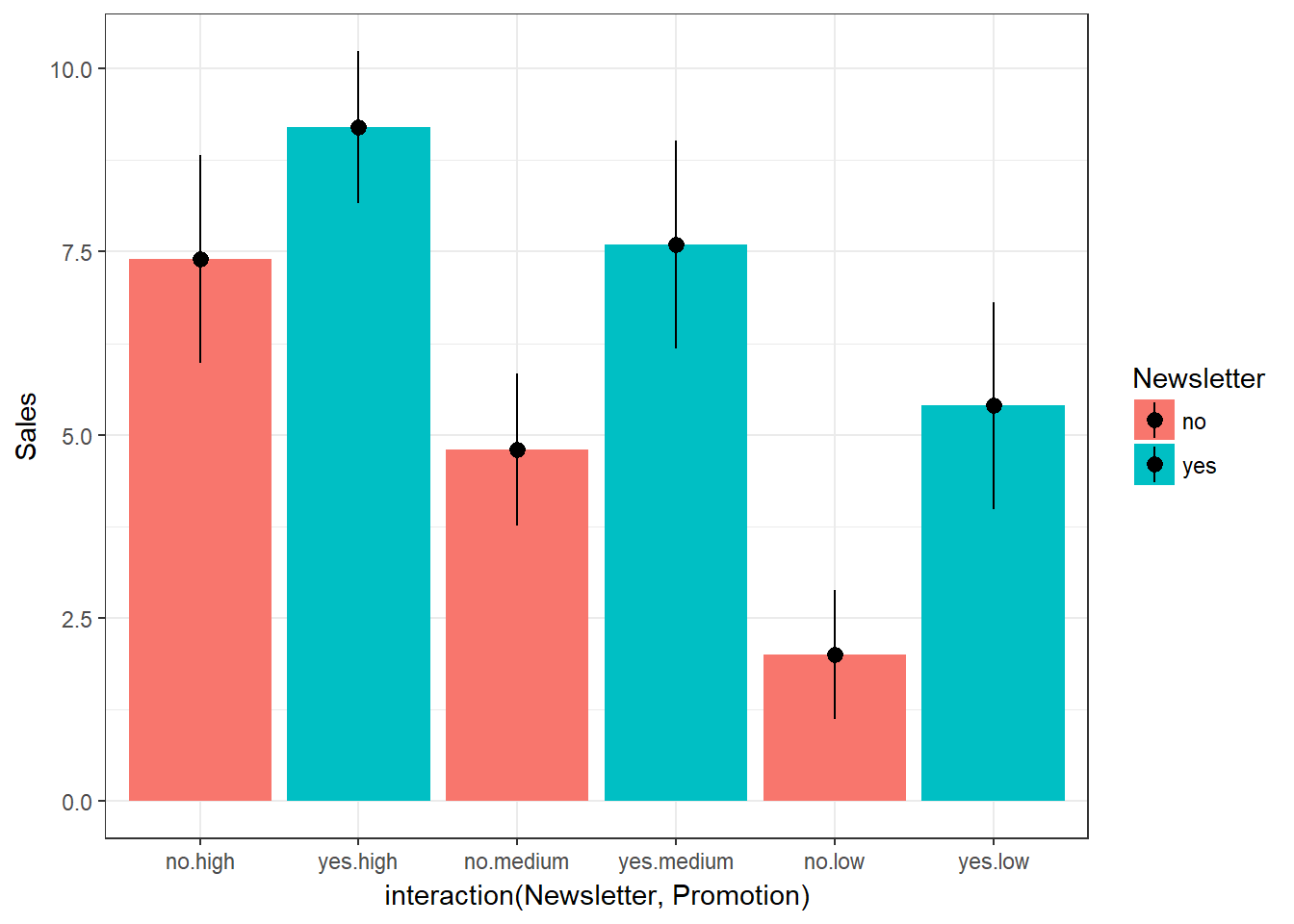

ggplot(online_store_promo, aes(x = interaction(Newsletter, Promotion), y = Sales, fill = Newsletter)) +

stat_summary(fun.y = mean, geom = "bar", position = position_dodge()) +

stat_summary(fun.data = mean_cl_normal, geom = "pointrange") +

theme_bw()

Figure 7.13: Plot of means (interaction)

So what is different compared to the one-way ANOVA? In the one-way ANOVA, we computed the sum of squares as:

\[\begin{equation} \begin{split} SS_T= SS_M+SS_R \end{split} \tag{7.1} \end{equation}\]The main difference is that in an N-way ANOVA the model sum of squares SSM consists of different components. In our case:

\[\begin{equation} \begin{split} SS_M= SS_{X_1}+SS_{X_2}+SS_{X_1X_2} \end{split} \tag{7.14} \end{equation}\]That is, we can further decompose the explained variance into the variance explained by the first factor (X1), the variance explained by the second factor (X2), and the variance explained by the interaction of these factors (X1X2). The interaction will tell us if the effect of one factor depends on the level of the second factor and vice versa. Because we now have more information available (the manipulation of the second factor), we would expect the amount of explained variance to increase relative to the amount of unexplained variance.

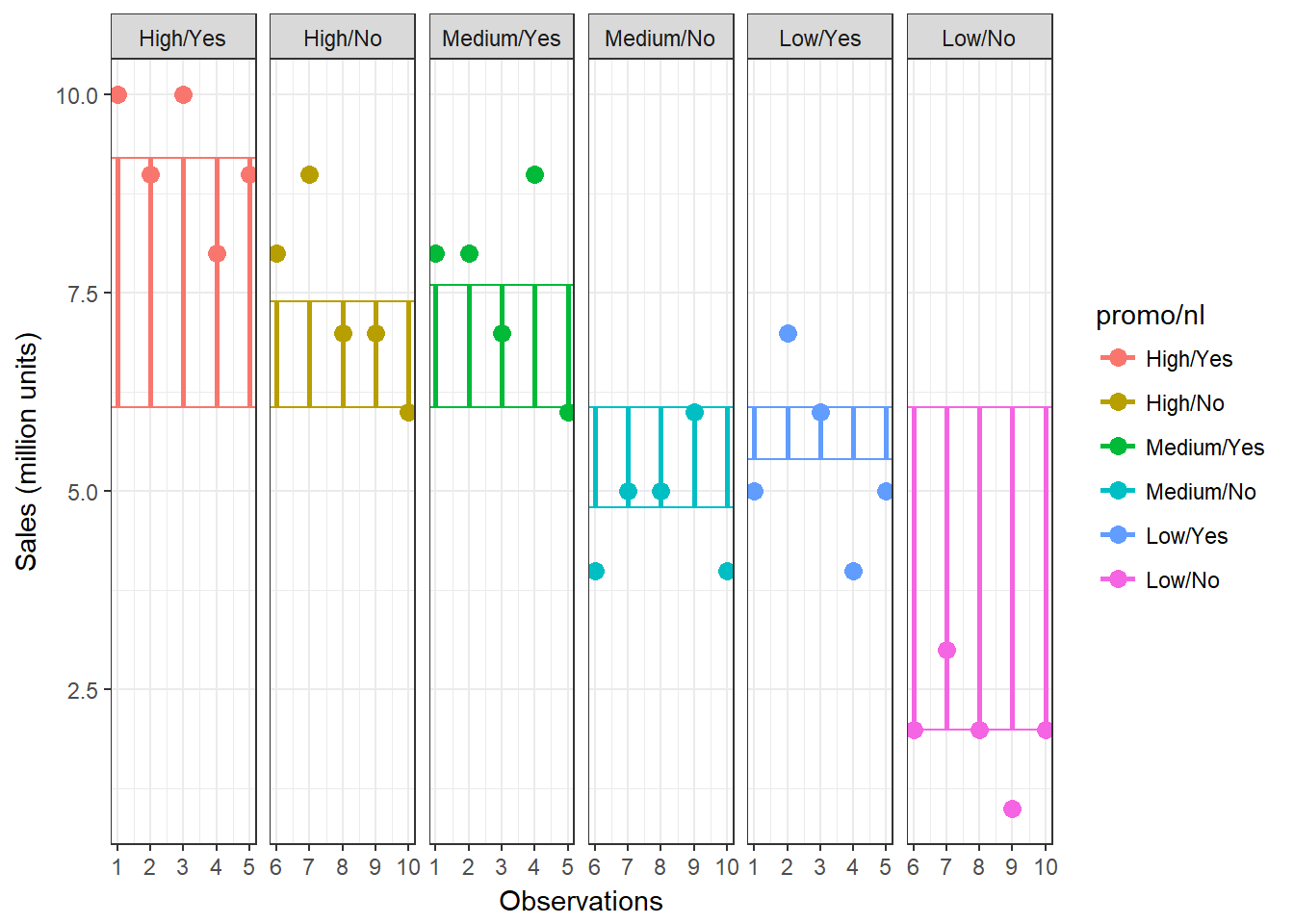

To visualize this, we can include this new information in the plot that we used before to inspect the model sum of squares:

Figure 7.14: Sum of Squares (N-way ANOVA)

You can see that our model now better represents the data using the additional information (i.e., the distance between the individual observations and the group mean has decreased). Let’s re-run the ANOVA using the additional information. To do this, we use the same commands as before and simply include the additional factor:

## Levene's Test for Homogeneity of Variance (center = mean)

## Df F value Pr(>F)

## group 5 0.689 0.6365

## 24## Df Sum Sq Mean Sq F value Pr(>F)

## Promotion 2 106.07 53.03 54.86 0.00000000112 ***

## Newsletter 1 53.33 53.33 55.17 0.00000011439 ***

## Promotion:Newsletter 2 3.27 1.63 1.69 0.206

## Residuals 24 23.20 0.97

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The Levene’s Test indicates that the variances of the groups are not significantly different. Theoretically, you would also need to test the distributional assumptions for each group again, but we skip this step here.

The ANOVA output shows us that the two main effects are significant, while the interaction is not. This means that online promotions and newsletter features result in higher sales. However, the effect of each factor is independent of the other. Note that in the presence of significant interaction effects, it would make no sense to interpret the main effects! If this would be the case, we would only conclude that the effect of one factor depends on the other factor. If the interaction effect is insignificant (as in our case), you could also conduct post hoc tests for each individual factor. However, you only need to conduct post hoc tests for factors with more than 2 levels (i.e., not for the newsletter factor) since there is no familywise error for variables with two categories.

In an N-way ANOVA, the multiple η2 measures the strength of the joint effect of two factors (also called the overall effect). To compute the multiple η2, the revised equation is:

\[\begin{equation} \begin{split} \eta^2= \frac{SS_{X_1}+SS_{X_2}+SS_{X_1X_2}}{SS_T} \end{split} \tag{7.15} \end{equation}\]From the output, we can extract the relevant sum of squares as follows:

(summary(aov)[[1]]$"Sum Sq"[1] + summary(aov)[[1]]$"Sum Sq"[2] +

summary(aov)[[1]]$"Sum Sq"[3])/(summary(aov)[[1]]$"Sum Sq"[1] +

summary(aov)[[1]]$"Sum Sq"[2] + summary(aov)[[1]]$"Sum Sq"[3] +

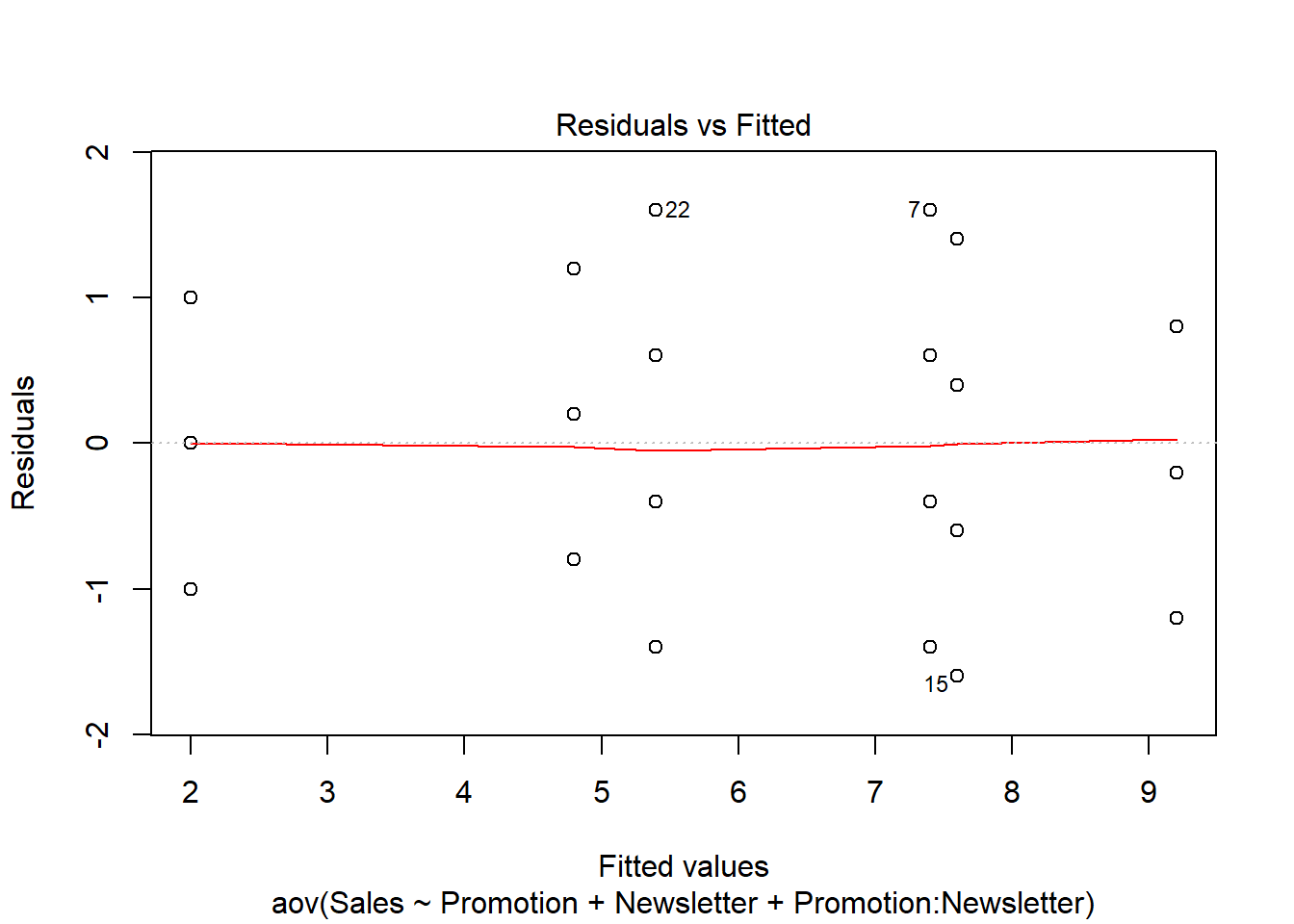

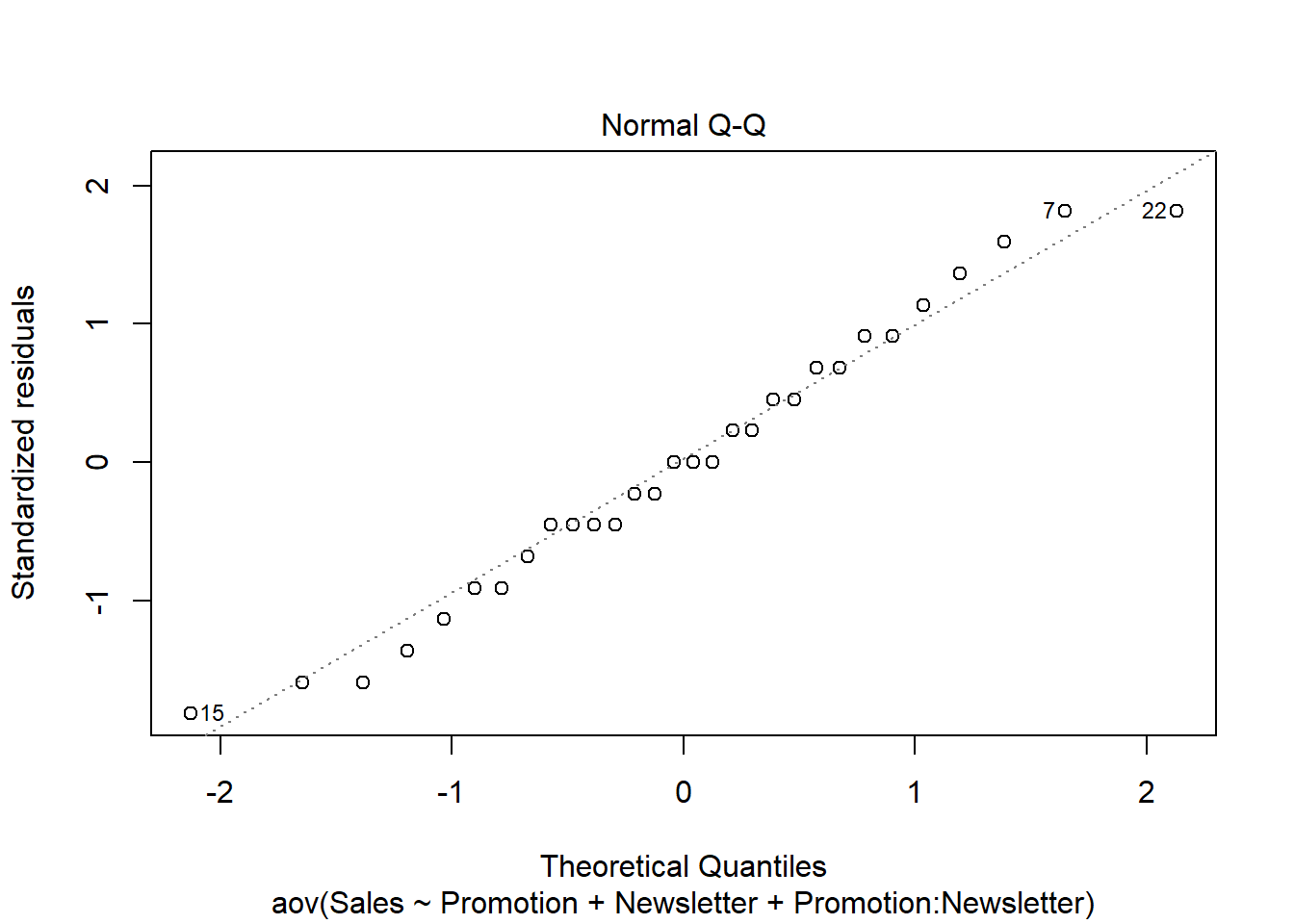

summary(aov)[[1]]$"Sum Sq"[4])## [1] 0.8751793As in the one-way ANOVA, we check the residuals plots generated by R to see if the residulas are approximately normally distributed and whether the residual variance is similar across groups.

plot(aov, 1) #homogeneity of variances

plot(aov, 2) #normal distribution of residuals

Reporting:

- There was a significant main effect of promotion on sales, F(2,24) = 53.03, p < 0.05.

- The post hoc tests based on Bonferroni and Tukey’s HSD revealed that the sales were significantly higher when using medium vs. low levels, high vs. medium levels, as well high vs. low levels of promotion.

- There was a significant main effect of newsletter features on sales levels, F(1,24) = 53.33, p < 0.05.

- The effect of each factor is independent of the other since the interaction effect between the level of promotion and direct mailing was insignificant, F(2,24) = 3.27, p > 0.05.

7.5 Non-parametric tests

When should you use non-parametric tests?

- When the dependent variable is measured at an ordinal scale and we want to compare more than 2 means

- When the assumptions of independent ANOVA are not met (e.g., assumptions regarding the sampling distribution in small samples)

The Kruskal–Wallis test is the non-parametric counterpart of the one-way independent ANOVA. It is designed to test for significant differences in population medians when you have more than two samples (otherwise you would use the Mann-Whitney U-test). The theory is very similar to that of the Mann–Whitney U-test since it is also based on ranked data. The Kruskal-Wallis test is carried out using the kruskal.test() function. Using the same data as before, we type:

kruskal.test(Sales ~ Promotion, data = online_store_promo)##

## Kruskal-Wallis rank sum test

##

## data: Sales by Promotion

## Kruskal-Wallis chi-squared = 16.529, df = 2, p-value = 0.0002575The test-statistic follows a chi-square distribution and since the test is significant (p < 0.05), we can conclude that there are significant differences in population medians. Provided that the overall effect is significant, you may perform a post hoc test to find out which groups are different. To get a first impression, we can plot the data using a boxplot:

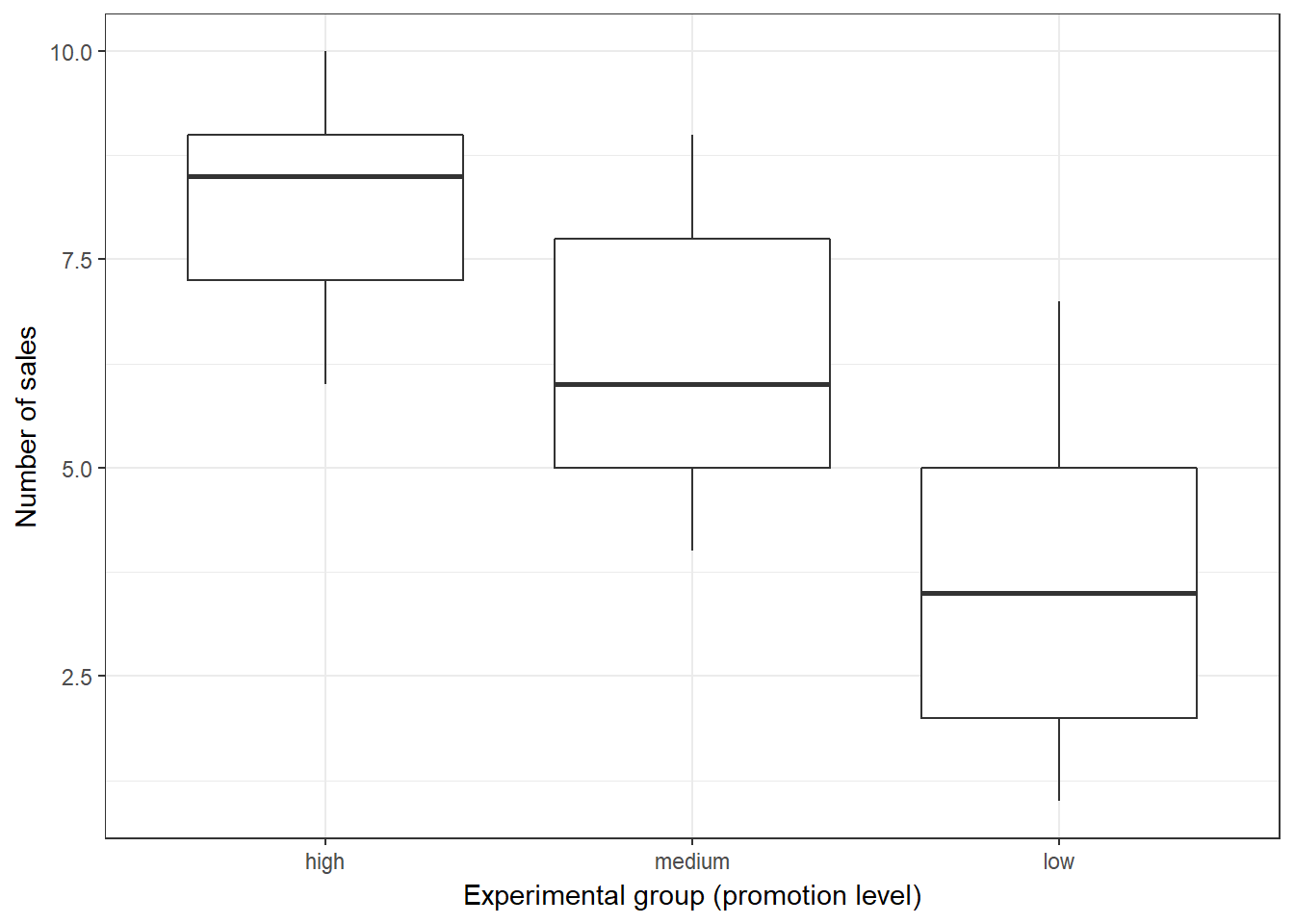

#Boxplot

ggplot(online_store_promo, aes(x = Promotion, y = Sales)) +

geom_boxplot() +

labs(x = "Experimental group (promotion level)", y = "Number of sales") +

theme_bw()

Figure 7.15: Boxplot

To test for differences between groups, we can, for example, apply post hoc tests according to Nemenyi for pairwise multiple comparisons of the ranked data using the appropriate function from the PMCMR package.

library(PMCMR)

posthoc.kruskal.nemenyi.test(x = online_store_promo$Sales,

g = online_store_promo$Promotion, dist = "Tukey")##

## Pairwise comparisons using Tukey and Kramer (Nemenyi) test

## with Tukey-Dist approximation for independent samples

##

## data: online_store_promo$Sales and online_store_promo$Promotion

##

## high medium

## medium 0.09887 -

## low 0.00016 0.11683

##

## P value adjustment method: noneThe results reveal that there is a significant difference between the “low” and “high” promotion groups. Note that the results are different compared to the results from the parametric test above. This difference occurs because non-parametric tests have more power to detect differences between groups since we loose information by ranking the data. Thus, you should rely on parametric tests if the assumptions are met.